How To Convert PDFs to Images for ML Projects Using Ghostscript and Multiprocessing

TLDR; Run the following Ghostscript command to convert a pdf file to a series of png files.

gs -dNOPAUSE -sDEVICE=png16m -r600 -sOutputFile=document-%02d.png "document.pdf" -dBATCH

PDF files frequently contain data (text, images, tables, logs etc) valuable for training machine learning algorithms. However, most ML models are designed to process datasets (training or inference) represented as RGB images (JPEG, PNG), text, tabular data or combinations of these base modalities. A first step in utilizing pdf files for machine learning is to convert PDF files to images and then explore subsequent tasks (e.g. image classification, object detection, text extraction, etc).

I recently had to assemble a dataset of images from PDF files and this post documents the process I followed - Ghostscript!

Ghostscript

Ghostscript is an interpreter for PostScript and Portable Document Format (PDF) files. Ghostscript consists of a PostScript interpreter layer, and a graphics library. The graphics library is shared with all the other products in the Ghostscript family, so all of these technologies are sometimes referred to as Ghostscript, rather than the more correct GhostPDL.

If you are on a Mac, Ghostscript is installed by default (included in the Apple OS X package). On other platforms, follow the installation guide here. Verify that Ghostscript is installed and working (available on your path) by running the following command gs on your terminal.

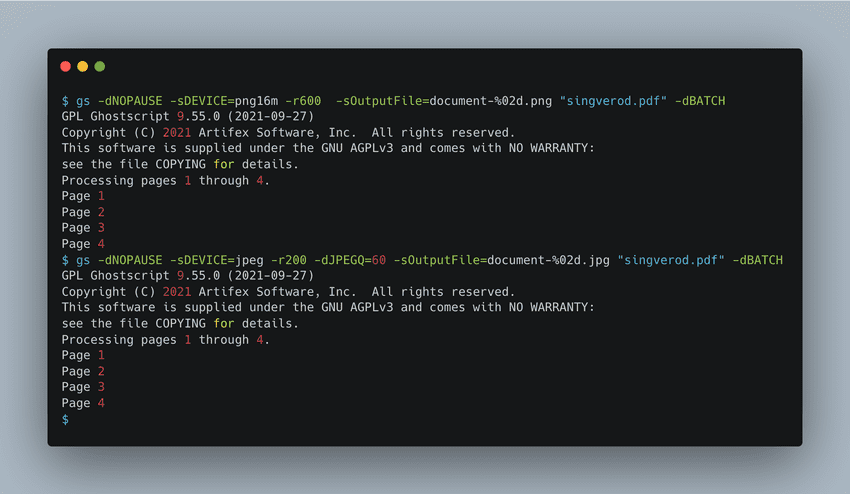

Ghostscript from the command line

Once Ghostscript is installed, you can run the following script from the command line to convert a pdf file to a series of images:

PNG

gs -dNOPAUSE -sDEVICE=png16m -r600 -sOutputFile=document-%02d.png "document.pdf" -dBATCH

JPG

gs -dNOPAUSE -sDEVICE=jpg -r200 -dJPEGQ=60 -sOutputFile=document-%02d.jpg "document.pdf" -dBATCH

The scripts above will take the specified pdf document.pdf, convert to a series of images and save them to the current directory. -dNOPAUSE tells Ghostscript to not pause after each page is rendered. -r600 specifies the resolution (dpi) of the output images. -sOutputFile=document-%02d.png specifies the output file name and the %02d is a placeholder for the page number.-sDEVICE=png16m and -sDEVICE=jpg specifies the output format.

Ghostscript in Python

We take the above script and convert it to a Python script using subprocess.

import osimport subprocess# make directory named outputoutput_dir = "output"os.makedirs(output_dir, exist_ok=True)file_path = "pdf/document.pdf"file_name = os.path.basename(file_path)file_name = file_name.split(".")[0]# execute Ghostscript command, print resultsresults = subprocess.run(["gs", "-dNOPAUSE", "-sDEVICE=png16m", "-r600", f"-sOutputFile={output_dir}/{file_name}-%02d.png", f"{file_path}", "-dBATCH"], stdout=subprocess.PIPE)print(results.stdout.decode("utf-8"))

Convert all PDFs in a directory

Similarly, you can walk through a directory of pdf files and convert them to images.

import osimport subprocessfrom tqdm import tqdm # progress bardef convert_pdf(file_path, output_dir, resolution=400):file_name = os.path.basename(file_path)file_name = file_name.split(".")[0]# execute Ghostscript command, print resultsresults = subprocess.run(["gs", "-dNOPAUSE", "-sDEVICE=png16m", f"-r{resolution}", f"-sOutputFile={output_dir}/{file_name}-%02d.png", f"{file_path}", "-dBATCH"], stdout=subprocess.PIPE)# make directory named outputoutput_dir = "output"os.makedirs(output_dir, exist_ok=True)# walk through folder named samp, convert all pdf files to pngfor root, dirs, files in os.walk("samp"):for file in tqdm(files):if file.endswith(".pdf"):file_path = os.path.join(root, file)convert_pdf(file_path, output_dir)##print number of images in output directoryprint(f"{len(os.listdir(output_dir))} images in output directory")

and here are the results:

(victors):pdftoimg#: python pdf_img.py100%|███████████████████████████████████| 70/70 [01:45<00:00, 1.50s/it]192 images in output directory

All well and good! We now have a series of images in the output directory. However, each pdf file takes about 1.5 seconds to convert each document. 1 minute and 45 seconds to convert 70 documents (note that conversion speed depends on the resolution parameter i.e., reducing your resolution will lead to faster conversion).

When the goal is to convert hundreds of thousands of documents, this can be slow. Hey multiprocessing!

Ghostscript with Python and multiprocessing

We can use the python multiprocessing library to speed up the conversion process. We can define a pool of processes and use the map function to run the conversion on each document in parallel. Luckily, converting files is an embarrassingly parallel task where all of the data and logic to complete the task can be encapsulated in a single function without any shared state.

from multiprocessing import Poolimport osimport subprocessimport timefile_list = []output_dir = "output_zip"pdf_directory = "samp"start_time = time.time()os.makedirs(output_dir, exist_ok=True)# get list of files in pdf_directory directoryfor root, dirs, files in os.walk(pdf_directory):for file in (files):if file.endswith(".pdf"):file_path = os.path.join(root, file)file_list.append(file_path)def convert_pdf(file_path, output_dir, resolution=400):file_name = os.path.basename(file_path)file_name = file_name.split(".")[0]# execute Ghostscript command, print resultssubprocess.run(["gs", "-dNOPAUSE", "-sDEVICE=png16m", f"-r{resolution}", f"-sOutputFile={output_dir}/{file_name}-%02d.png", f"{file_path}", "-dBATCH"], stdout=subprocess.PIPE)def process_pdf(args):file_path, i = argsconvert_pdf(file_path, output_dir, resolution=400)if __name__ == "__main__":# create pool of threads to process each pdf in parrallelnum_processes = 30pool = Pool(processes=(num_processes))pool.map(process_pdf, zip(file_list, range(len(file_list))))pool.close()print(f"Last file completed in {time.time() - start_time} seconds")

and the output ..

(victors):pdftoimg#: python pdf_img_multiproc.pyLast file completed in 15.732686758041382 seconds

We have shaved our time down from 1 minute 45 seconds to 15.7 seconds (6.7x speed up).

And that's it! Happy data generating!

Introducing Peacasso: A UI Interface for Generating AI Art with Latent Diffusion Models (Stable Diffusion)

Introducing Peacasso: A UI Interface for Generating AI Art with Latent Diffusion Models (Stable Diffusion) Signature Image Cleaning with Tensorflow 2.0 - TF.Data and Autoencoders

Signature Image Cleaning with Tensorflow 2.0 - TF.Data and Autoencoders ART + AI — Generating African Masks using (Tensorflow and TPUs)

ART + AI — Generating African Masks using (Tensorflow and TPUs) How to Speed Up Neural Network Training with Intel's Gaudi HPUs. A Tensorflow 2.0 Object Detection Example

How to Speed Up Neural Network Training with Intel's Gaudi HPUs. A Tensorflow 2.0 Object Detection Example Latent Diffusion Models: Components and Denoising Steps

Latent Diffusion Models: Components and Denoising Steps Designing Anomagram

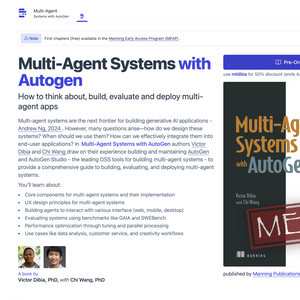

Designing Anomagram Announcing A New Book - Designing Multi-Agent Systems

Announcing A New Book - Designing Multi-Agent Systems