How to Speed Up Neural Network Training with Intel's Gaudi HPUs: A TensorFlow 2.0 Object Detection Example

Source: Techcrunch

Move over TPUs, say hello to HPUs - Habana Processing Units, from Habana Labs!

What do GPUs, TPUs and HPUs have in common? They are all hardware accelerators: multicore processors that can be used (with special software) to speed up the execution of programs, in this case the tensor computations needed to train neural networks. In many cases, accelerators can be chained together (with some small coordination overhead) so that programs are distributed across them in parallel, resulting in faster training.

Over the last few years, there has been intense research and development in this area, with emerging standards and platforms you might already be familiar with, e.g., NVIDIA's GPUs and software stack and Google's TPUs. More recently, Intel has released the Gaudi platform architecture, and this post describes my experience trying it out.

What is the Habana Gaudi Processor?

The TPC core natively supports the following data types: FP32, BF16, INT32, INT16, INT8, UINT32, UINT16 and UINT8.

The Gaudi memory architecture includes on-die SRAM and local memories in each TPC. In addition, the chip package integrates four HBM devices, providing 32 GB of capacity and 1 TB/s bandwidth. The PCIe interface provides a host interface and supports both generation 3.0 and 4.0 modes.
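As a rough back-of-the-envelope illustration of what these numbers mean, the sketch below computes an upper bound on how many values of a given data type fit in 32 GB of HBM. It assumes decimal gigabytes (1 GB = 10^9 bytes) and ignores activations, gradients and optimizer state, so real models fit far fewer parameters; the helper names are illustrative only.

```python
# Bytes per element for the data types the TPC supports natively.
BYTES_PER_ELEMENT = {
    "FP32": 4, "BF16": 2,
    "INT32": 4, "INT16": 2, "INT8": 1,
    "UINT32": 4, "UINT16": 2, "UINT8": 1,
}

# 32 GB of HBM, assuming decimal gigabytes (an assumption of this sketch).
HBM_CAPACITY_BYTES = 32 * 10**9


def max_elements(dtype: str, capacity_bytes: int = HBM_CAPACITY_BYTES) -> int:
    """Upper bound on how many elements of `dtype` fit in `capacity_bytes`."""
    return capacity_bytes // BYTES_PER_ELEMENT[dtype]


if __name__ == "__main__":
    for dtype in ("FP32", "BF16"):
        billions = max_elements(dtype) / 1e9
        print(f"{dtype}: up to {billions:.0f} billion values")
```

Halving the element size (FP32 to BF16) doubles the number of values that fit, which is one reason BF16 is attractive for training.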

Gaudi is the first DL training processor that has integrated RDMA over Converged Ethernet (RoCE v2) engines on-chip. With bi-directional throughput of up to 2 Tb/s, these engines play a critical role in the inter-processor communication needed during the training process. This native integration of RoCE allows customers to use the same scaling technology, both inside the server and rack (scale-up), as well as to scale across racks (scale-out). These can be connected directly between Gaudi processors, or through any number of standard Ethernet switches.

The Habana Developer Experience. Is it Worth it?

As an ML engineer, you are probably interested in approaches that save you time and money while running ML experiments. At the same time, you want to spend the bulk of your time iterating on your model as opposed to debugging the tool/platform/framework.

Habana recognizes this need and has done a few things IMO that address this:

- Standardized on Docker containers for delivering SDK updates. This way, you avoid the usual issues with installing drivers, matching library versions, etc.

- Partnered with cloud providers (currently AWS) to make hardware setup effortless.

- Provides the SynapseAI SDK for efficient execution of neural network topologies on Gaudi hardware. The SynapseAI TensorFlow/PyTorch bridge identifies the subset of the framework’s computation graph that can be accelerated by Gaudi. For performance optimization, the compilation recipe is cached for future use. Operators that are not supported by Gaudi are executed on the CPU. Habana Gaudi also provides templates for developing custom kernels, which can then be used in custom ops within your favorite framework.

- Provides a growing list of reference models and training code that illustrate best practices.

- Provides multiple methods to achieve distributed training:

TensorFlow: Gaudi scaling with data parallelism in the TensorFlow framework is achieved using two distinct methods: Habana Horovod, and HPUStrategy integrated with the tf.distribute API.

PyTorch: Gaudi scaling with data parallelism in the PyTorch framework is achieved using the torch.distributed package with DDP (Distributed Data Parallel). DDP is a widely adopted single-program multiple-data training paradigm. With DDP, the model is replicated on every process, and every model replica is fed a different set of input data samples. DDP takes care of gradient communication to keep model replicas synchronized and overlaps it with the gradient computations to speed up training.
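To make the data-parallel idea concrete, here is a minimal pure-Python sketch of one synchronized training step. It is not Habana, Horovod or DDP code: the toy model (y = w * x with a mean squared error loss), the function names and the `all_reduce_mean` stand-in are all assumptions made for illustration. Each replica computes a gradient on its own shard, the gradients are averaged, and every replica applies the same update.

```python
from typing import List, Sequence, Tuple

Sample = Tuple[float, float]  # (input x, target y)


def local_gradient(w: float, shard: Sequence[Sample]) -> float:
    """Gradient of the mean squared error for y = w * x on one shard."""
    return sum(2 * (w * x - y) * x for x, y in shard) / len(shard)


def all_reduce_mean(values: Sequence[float]) -> float:
    """Stand-in for the all-reduce step: every replica gets the mean."""
    return sum(values) / len(values)


def data_parallel_step(
    w: float, batch: Sequence[Sample], num_replicas: int, lr: float = 0.01
) -> float:
    """Split the batch across replicas, average gradients, update w once."""
    if len(batch) % num_replicas != 0:
        raise ValueError("batch size must be divisible by num_replicas")
    shard_size = len(batch) // num_replicas
    shards: List[Sequence[Sample]] = [
        batch[i * shard_size:(i + 1) * shard_size] for i in range(num_replicas)
    ]
    # In a real system each gradient is computed on a different device.
    grads = [local_gradient(w, shard) for shard in shards]
    return w - lr * all_reduce_mean(grads)


if __name__ == "__main__":
    batch = [(float(x), 3.0 * x) for x in range(1, 9)]
    print(data_parallel_step(0.0, batch, num_replicas=1))
    print(data_parallel_step(0.0, batch, num_replicas=4))
```

With equally sized shards, the averaged gradient matches the gradient of the full batch, so the multi-replica update agrees with the single-replica one; the speedup comes from computing the shard gradients at the same time.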

Amazon DL1 Instance

To get started, here are two simple steps:

- Set up and launch a Habana base AMI on AWS. This lets you launch an EC2 DL1 instance (dl1.24xlarge, with 8 Habana Gaudi accelerator cards).

Hint: For users new to AWS, remember to allocate a significant amount of disk space to your Habana DL1 instance; the default of 8 GB is insufficient for most scenarios. Also spend some time getting familiar with Docker.

- Pull and run the Habana Docker image. While the DL1 instance provides access to the Habana Gaudi accelerator cards, the Docker images provided by Habana set up the right software. Select the TensorFlow Docker image, which is what we will be using.

Follow the instructions on the installer page to run the container. The remaining steps must be run inside the Docker container.

Training an Object Detection Model on Habana Gaudi with TensorFlow

Habana Labs provides reference implementations of many computer vision tasks in both the PyTorch and TensorFlow frameworks. These references are valuable because they already take care of the hard work required to ensure that most of the ML computations (ops) in both frameworks run efficiently on the HPU cards. For this experiment, we will use the RetinaNet TensorFlow implementation.

In the Habana TensorFlow container, clone the RetinaNet model and install its requirements.

Also, navigate to the /official/vision/beta folder. This folder contains the primary train.py script, which we will reference later. We will also download the dataset into this directory, and the commands below assume it is located there.

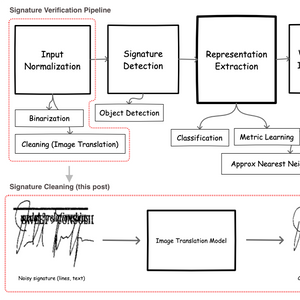

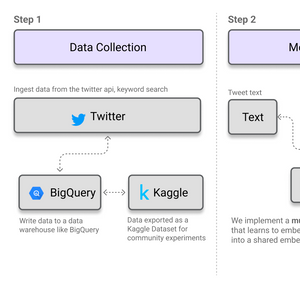

Download the SignverOD Dataset from Kaggle

We will download the SignverOD dataset, which is freely available on Kaggle. Note that you will need a Kaggle account to use the API. Alternatively, you can download the dataset manually and copy it to your Habana Docker container.

pip install kaggle

Set up your Kaggle username and token in the environment:

export KAGGLE_USERNAME=yourusername

export KAGGLE_KEY=xxxxxxxxxxxxxx

Download and unzip the dataset:

kaggle datasets download -d victordibia/signverod

unzip signverod.zip

This will download the SignverOD dataset, which contains a tfrecords folder with train and eval data shards.
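Before starting a long training run, it can help to sanity-check the setup. The sketch below is a small helper script, not part of the Kaggle or Habana tooling: it reports which Kaggle credential variables are unset and counts the files matching the same tfrecords/train* and tfrecords/eval* patterns that the training commands use. The function names are hypothetical.

```python
import glob
import os
from typing import Dict, List, Mapping


def missing_kaggle_credentials(env: Mapping[str, str] = os.environ) -> List[str]:
    """Return the Kaggle credential variables that are unset or empty."""
    return [
        name for name in ("KAGGLE_USERNAME", "KAGGLE_KEY") if not env.get(name)
    ]


def list_shards(root: str = "tfrecords") -> Dict[str, List[str]]:
    """Group files under `root` using the train*/eval* glob patterns."""
    return {
        split: sorted(glob.glob(os.path.join(root, f"{split}*")))
        for split in ("train", "eval")
    }


if __name__ == "__main__":
    missing = missing_kaggle_credentials()
    if missing:
        print("Unset Kaggle variables:", ", ".join(missing))
    for split, files in list_shards().items():
        print(f"{split}: {len(files)} shard(s)")
```

If either split reports zero shards, the input_path patterns passed to train.py will not match anything, so it is worth fixing the dataset location first.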

Train RetinaNet on a Single Gaudi Card

The following command trains the RetinaNet model on a single Gaudi card:

python3 train.py --experiment=retinanet_resnetfpn_coco --model_dir=output --mode=train --config_file=configs/experiments/retinanet/config_beta_retinanet_1_hpu_batch_8.yaml --params_override="{task: {init_checkpoint: gs://cloud-tpu-checkpoints/vision-2.0/resnet50_imagenet/ckpt-28080, train_data:{input_path: tfrecords/train*}, validation_data: {input_path: tfrecords/eval*} }}"
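The nested braces and quotes in --params_override are easy to get wrong when typing the command by hand. As one possible convenience, the sketch below assembles the same single-card command in Python. The flags and values are copied from the command above; the helper names (`build_params_override`, `build_train_command`) are hypothetical and not part of the reference implementation.

```python
import shlex
from typing import List

# Checkpoint path copied from the training command in this post.
INIT_CHECKPOINT = (
    "gs://cloud-tpu-checkpoints/vision-2.0/resnet50_imagenet/ckpt-28080"
)


def build_params_override(
    train_glob: str = "tfrecords/train*", eval_glob: str = "tfrecords/eval*"
) -> str:
    """Build the YAML-style override string passed to --params_override."""
    return (
        "{task: {"
        f"init_checkpoint: {INIT_CHECKPOINT}, "
        f"train_data: {{input_path: {train_glob}}}, "
        f"validation_data: {{input_path: {eval_glob}}}"
        "}}"
    )


def build_train_command(
    config_file: str, model_dir: str = "output", mode: str = "train"
) -> List[str]:
    """Return the train.py invocation as an argument list."""
    return [
        "python3", "train.py",
        "--experiment=retinanet_resnetfpn_coco",
        f"--model_dir={model_dir}",
        f"--mode={mode}",
        f"--config_file={config_file}",
        f"--params_override={build_params_override()}",
    ]


if __name__ == "__main__":
    cmd = build_train_command(
        "configs/experiments/retinanet/config_beta_retinanet_1_hpu_batch_8.yaml"
    )
    # Print a shell-quoted version; `cmd` can also go to subprocess.run.
    print(shlex.join(cmd))
```

Passing the argument list to subprocess.run avoids shell quoting entirely, which is the main reason to build the command as a list rather than a single string.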

Train RetinaNet on 8 Gaudi Cards

The following command trains the RetinaNet model on all 8 Gaudi cards available in the DL1 instance:

mpirun --allow-run-as-root --tag-output --merge-stderr-to-stdout --output-filename /root/tmp/retinanet_log --bind-to core --map-by socket:PE=4 -np 8 python3 train.py --experiment=retinanet_resnetfpn_coco --model_dir=~/tmp/retina_model --mode=train_and_eval --config_file=configs/experiments/retinanet/config_beta_retinanet_8_hpu_batch_64.yaml --params_override="{task: {init_checkpoint: gs://cloud-tpu-checkpoints/vision-2.0/resnet50_imagenet/ckpt-28080, train_data:{input_path: tfrecords/train*}, validation_data: {input_path: tfrecords/eval*} }}"

The resulting trained model and training logs (e.g., for visualization in TensorBoard) are written to the directory passed as --model_dir (output in the single-card example). They can then be copied, exported as saved_models and used with the SignVer library.
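The two config files used above appear to encode the number of cards and the batch size in their names (1_hpu_batch_8 and 8_hpu_batch_64). Assuming that reading is right, and that the batch number is the global batch size, the short sketch below works out the per-card batch size. The parsing helper is hypothetical and relies only on the file-name pattern, not on the contents of the YAML files.

```python
import re
from typing import Tuple


def parse_config_name(config_file: str) -> Tuple[int, int]:
    """Extract (num_cards, global_batch) from a '<n>_hpu_batch_<b>.yaml' name."""
    match = re.search(r"_(\d+)_hpu_batch_(\d+)\.yaml$", config_file)
    if match is None:
        raise ValueError(f"unrecognized config name: {config_file}")
    return int(match.group(1)), int(match.group(2))


def per_card_batch(config_file: str) -> int:
    """Global batch size divided evenly across the cards."""
    num_cards, global_batch = parse_config_name(config_file)
    return global_batch // num_cards


if __name__ == "__main__":
    for name in (
        "config_beta_retinanet_1_hpu_batch_8.yaml",
        "config_beta_retinanet_8_hpu_batch_64.yaml",
    ):
        print(name, "->", per_card_batch(name), "samples per card")
```

Under that assumption, both configurations process 8 samples per card per step; the 8-card run simply sees 8 times as much data in each step.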

Conclusion

In my experience, it was relatively easy to train an object detection model using my own custom data and a reference model provided by Habana. I also experimented with training a custom TensorFlow 2.0 Keras model (a convolutional autoencoder), which was fairly easy: all that needs to be done is to import and call load_habana_module(), after which all supported ops run on a single HPU card.

Single and Distributed Training via tf.distribute.Strategy

from habana_frameworks.tensorflow import load_habana_module
load_habana_module()

Using multiple cards simultaneously is more involved and requires some additional work. If you are using TensorFlow, the tf.distribute HPUStrategy appears to offer the best developer experience so far.

from habana_frameworks.tensorflow.distribute import HPUStrategy
strategy = HPUStrategy()
# For use with Keras
with strategy.scope():
    model = ...
    model.compile(...)
model.fit(...)

Thoughts on Improving the Developer Experience

IMO, one way to further improve the developer experience is to explore an SDK design (and corresponding examples) that demonstrates how developers can prototype their training scripts locally on a CPU without errors, and have the same code work automatically in an environment with Gaudi cards.

E.g., the habana_frameworks library could be installed on a CPU-only machine and fall back to CPU abstractions when an HPU is unavailable.

I understand that this is non-trivial, but with the right set of checks, error messages and examples, it could be a game changer, especially for onboarding new developers. This design could give developers the freedom to carefully port and test their code locally (minimizing costs!) and then run training on the DL1 instance.
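Until something like this exists in the SDK, a user-side workaround is to guard the import. The sketch below is one way to do that, under the assumption that a missing habana_frameworks package is the only failure to handle; it does not cover the case where the package is installed but no Gaudi card is present. The function name `enable_hpu_if_available` is hypothetical, while load_habana_module() is the call used earlier in this post.

```python
import importlib


def enable_hpu_if_available() -> bool:
    """Load the Habana TensorFlow module when the package is installed.

    Returns True if load_habana_module() was called, and False if the
    habana_frameworks package is missing (e.g., on a CPU-only laptop).
    """
    try:
        habana_tf = importlib.import_module("habana_frameworks.tensorflow")
    except ImportError:
        # Package not installed: keep going on the default (CPU) device.
        return False
    habana_tf.load_habana_module()
    return True


if __name__ == "__main__":
    device = "HPU" if enable_hpu_if_available() else "CPU"
    print(f"Training will run on: {device}")
```

Calling this once at the top of a training script lets the same file run on a local machine for debugging and on the DL1 instance for the real run.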

The space of hardware accelerators for machine learning is growing rapidly. IMO developer SDKs with good abstractions and good developer UX will definitely shape the future of this space.

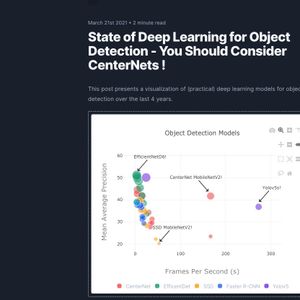

State of Deep Learning for Object Detection - You Should Consider CenterNets!

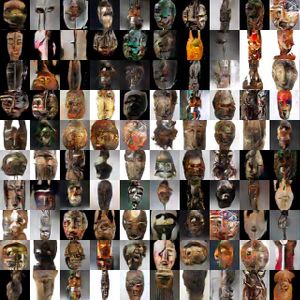

State of Deep Learning for Object Detection - You Should Consider CenterNets! ART + AI — Generating African Masks using (Tensorflow and TPUs)

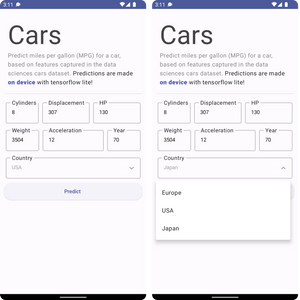

ART + AI — Generating African Masks using (Tensorflow and TPUs) How to Build An Android App and Integrate Tensorflow ML Models

How to Build An Android App and Integrate Tensorflow ML Models Top 10 Machine Learning and Design Insights from Google IO 2021

Top 10 Machine Learning and Design Insights from Google IO 2021 How to Finetune BERT for Text Classification (HuggingFace Transformers, Tensorflow 2.0) on a Custom Dataset

How to Finetune BERT for Text Classification (HuggingFace Transformers, Tensorflow 2.0) on a Custom Dataset Signature Image Cleaning with Tensorflow 2.0 - TF.Data and Autoencoders

Signature Image Cleaning with Tensorflow 2.0 - TF.Data and Autoencoders U2Net Going Deeper with Nested U-Structure for Salient Object Detection | Paper Review

U2Net Going Deeper with Nested U-Structure for Salient Object Detection | Paper Review How to Build MultiModal Recommender Systems with Tensorflow

How to Build MultiModal Recommender Systems with Tensorflow