Signature Image Cleaning with Tensorflow 2.0 - TF.Data and Autoencoders

TLDR: We train a deep convolutional autoencoder to remove noise from signature images. Want to try it out? A link to a Kaggle Notebook is available below.

As part of the overall task of signature verification, signature cleaning seeks to automatically remove background noise, text, lines etc that often accompany signatures in the wild. Consider we are building a system for signature verification on signed contracts; we have records for registered users (name, email and atleast one reference document they have signed). Our task is to verify that a new submitted document which claims to be signed by a registered user, was indeed signed by that user. To achieve this goal we need to solve a few problems - first, we need to extract signatures from the submitted document, remove any background noise or artifacts and then compare the cleaned signature to our reference signature on record.

In this notebook, we will explore how we can the second task - how to implement signature cleaning using an image translation model (a convolutional autoencoder). Here is a list of what you will learn and accomplish:

- Problem Framing: Learn how to frame signature image cleaning as a machine learning task and walk through a pros and cons of multiple approaches.

- Synthetic Data Generator: Learn how to creatively design a synthetic data generator (simulator) useful for training a model that works well in practice.

- Data Generator with TF.Data: Learn how to write a tf.data pipeline that yields batches of samples from your synthetic data generator, for efficient use during model training.

- Training and Evaluation: Build, train and evaluate an autoencoder model for signature cleaning that utilizes your tf.data pipeline.

Problem Framing: Signature Cleaning as Image Translation

We can frame the task of signature cleaning as as an image translation problem where our goal is to learn a transformation from a source domain (noisy signatures) to a target domain (clean signatures). Two high level appoaches are posible - paired supervised image translation and unpaired image translation.

Paired Supervised Image Translation

If we have access to pairs of clean + noisy samples, we can apply some model architecture to learn a translation function across these pairs.

Candidate Models:

- Point Estimate Models: Point estimate models learn to model a translation function given an input. A familiar example of models in this category is a denoising autoencoder that learns to remove random noise from images. Other related models include a Unet model (learns to output cleaned image instead of segmentation mask) and U2Net models.

- Generative Models: Generative models aim to model the distribution of the target domain (conditioned on some input). A classic example is a Variational Autoencoder or a conditional GAN. Both approaches learn the target distribution from which we can draw samples conditioned on a source domain input. This approach can be particularly valuable if our goal is to generate new unseen samples clean signature. In practice, these models are morecomplicated to implement, sometimes yield fuzzy or unrealistic results and or include unexpected artifacts. Pix2PixGAN

Perhaps the biggest challenge to a paired supervised image translation approach is that we need example aligned pairs of the source and target domain for training. This can be very expensive to curate and acquire.

Unpaired Image Translation

This explores the use of unpaired examples to learn a mapping from clean to noisy. It assumes we have access to a large dataset of the source domain (noisy images), and a separate large datasets of the target domain (clean images). This method requires less data curation effort, but may not result in high quality translations (this is mainly because there is no explicit supervisory signal, making the problem underconstrained).

Candidate Models:

- CycleGAN is perhaps a classic example. It helps address the under constrained problem by introducing a cycle consistency loss.

Which to Use?

An unpaired strategy holds promise i.e., it is relatively easier to assemble a large unlabelled set of images from source and target domains than to manually curate paired examples. However, the unpaired problem is underconstrained, and can suffer several known problems of GANs.limitations. Existing work also indicate that a paired approach can result in better translation results (they show how a UNet based autoencoder is 2.2x faster than a CycleGAN approach and achieves better SSIM (4 points) and MSE values).

In our use case, we have some idea on the space of noise we want to remove and can explore a synthetic data generation process. E.g. we know that the kind of noise we are likely to see in live signature docs are mostly lines, dates, titles, names, salt and pepper noise etc. We can write a simulator that takes genuine real world signatures and randomly generates noisy pairs that juxtapose all of the noise artifacts we care about. Overall, it takes less effort to create this simulator compared to manually curating a clean vs noisy set.

A summary on pros and cons of both approaches:

Unpaired Translation

- Pros:

- Will benefit from the availability of large source (noisy) and target (clean) datasets

- Will naturally learn a diverse set of edge cases from large corpora

- Cons

- There will likely be artifacts in the resulting clean images (due to limitations of unpaired image translation methods)

- Can be challenging to improve/control the resulting model to address any identified failure cases.

Paired Translations via Simulations

-

Pros:

- Will work very well for covered edge cases. If the translation space is well defined and can be represented by rules in a simulator, this solution is sufficient.

- Can support rapid iterations and offline evaluation of multiple model architectures

- Simulator rules can be continuously updated to additional test cases as they are discovered

- We can generate 'infinite' training samples and train a model until convergence (i.e., it learns to solve the task given the examples generated)

-

Cons

- Simulator needs to be comprehensive and cover all edge cases

- Will not readily generalize to unseen cases. Perhaps a generative model is more useful here?

- Evaluating on simulator test cases does not tell us about the real world. We need to still design a test harness that efficiently helps us identify failure cases. Using this information, the simulator needs to be constantly updated to reduce any differences between the online distribution of noisy signatures and offline noisy signatures which the simulator generates.

In this work, we will take a paired data simulation approach. We will use the CEDAR dataset as our source of "genuine" images and create a simulator to generate noisy samples based on these samples.

Designing a Synthetic Paired Data Generator Function

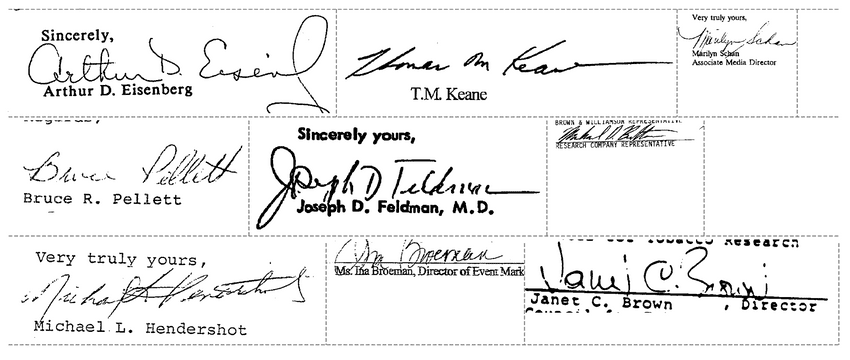

For this usecase, I have studied a variety of example signatures in the wild (see examples in the image above, sourced from the SignverOD dataset) and assembled the following set of rules to build the synthetic data generator:

Initial Rules

For each image, explore the following augmentations to produce a "noisy" version.

- Add lines

- Randomly select one of 3 different offsets - top, middle and bottom

- add slight slants to line

- Add multiple lines of varied width

- Add text

- Randomly select one of 9 fonts

- Randomly select words from MIT wordnet. Also add some primary words (e.g., yours sincerely) known to frequently occur as background text around signatures to increase the model's probability of removing those words. Also add random punctuations

- Place text at random height locations.

- Add salt and pepper noise to mirror signature thresholding and real world document scanning artifacts. This (as well as all of the other steps) turn out to be important for generalization!

Iterating on the Simulator After Reviewing Online Evaluations

Some updates to the simulator that were implemented after reviewing failure modes when tested online with signatures extracted from scanned documents in the wild.

- Increase line thickness ranges

- Add vertical lines to arbitraty y locations

- Compress/resize text to mirror live aspect rations. i.e., add text to original image and then resize

- Add more bold and italics text (earlier version did not priotitize this, leading to a model that did not remove bold text)

- Apply thresholding after the noise + text addition, not before. At test time, the model sees a thresholded image where there are no low intensity pixel. Thats makes for a significantly different distribution.

- Text aspect ratio: Neural nets typically require a fixed sized input, meaning signatures are resized to a square aspect ratio before being fed into a neural network. This aspect ration has to be mirrored by the simulator - i.e., the entire noisy image is resized after adding text.

Create a TF Dataset Loader that Uses The Generator Function

Addressing Potential Data Leakage in Constructing Train/Validation Sets

In the CEDAR dataset, there are signatures and forgeries of the same signer ... if we dont do a train/val split based on signatures or signers, there is a chance that the model sees the same structures in train and test which might cause it to "memorize" these structures and fail to perform as well on completely new datasets.

- Model learns to clean well for types of signers in dataset

- Since evaluation set has those same signatures, the model might use that infromation and show performance above what we would see in completely new datasets.

We select the ids of 10 signers (out of 55 signers), move their signatures and forgeries to a different folder

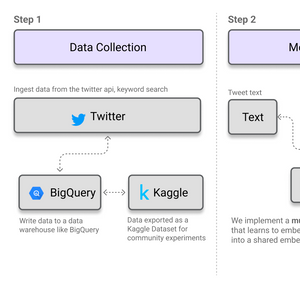

Create tf.Data Dataset from Tensor Slices

- Create train and validation set using list of train and validation images

- Apply a mapping function using tf.py_function that calls our paired data generator function

batch_size = 32train_ds = tf.data.Dataset.from_tensor_slices((train_images, train_images)).shuffle(5, seed=123).repeat(3).batch(batch_size)val_ds = tf.data.Dataset.from_tensor_slices((val_images, val_images)).batch(batch_size)def generate_pair(x):clean, aug = get_aug(x.numpy())return (aug, clean)train_ds = train_ds.map(lambda x, y: tf.py_function(generate_pair, [x], [tf.float32,tf.float32]))val_ds = val_ds.map(lambda x, y: tf.py_function(generate_pair, [x], [tf.float32,tf.float32]))

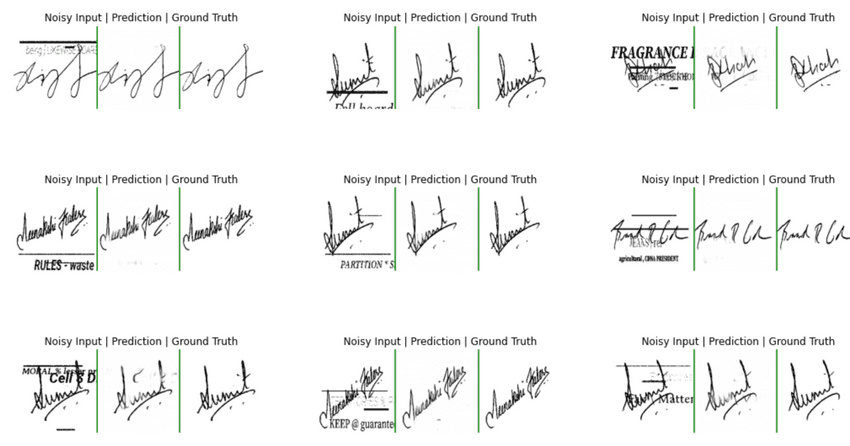

Visualize Data from Our tf.Data Pipeline

It is always a good idea to carefully visualize and review example data generated by our tf.data pipeline. Below we take a single batch from our pipelie and visualize pairs of noisy and clean images. Note that each time a sample is drawn, a random augmentation is applied.

Training a Convolutional Autoencoder Model

We implement a simple convolutional autoencoder model using 3 conv2D layers in the encoder and decoder. As a quick refresher, an autoencoder learns to map some input data to a lower dimension and then reconstruct the input from this lower dimension representation. In this case our goal is to reconstruct a "cleaned" version of the input, given the low dimension. We will add Dropout layers to reduce the chance of our model overfitting.

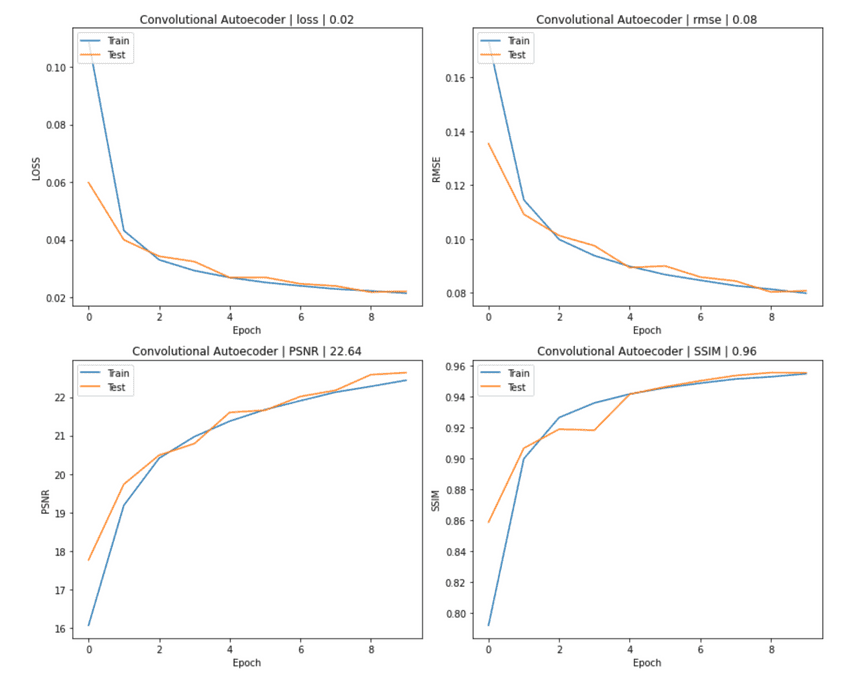

Model Evaluation - Image Similarity Metrics

To evaluate our model, we can leverage metrics for image quality assessment. That is, we want to assess the quality of a target (cleaned image), given some ground truth reference. In this example, we will explore 3 metrics for this task

- Root Mean Squared Error (RMSE). Implemented using tf.image.psnr. Implemented using tf.keras.metrics.RootMeanSquaredError

- Peak Signal to Noise Ration (PSNR)

- Structural Similarity index (SSIM). Implemented using tf.image.ssim

Training a Convolutional Autoencoder Model

We implement a simple convolutional autoencoder model using 3 conv2D layers in the encoder and decoder. As a quick refresher, an autoencoder learns to map some input data to a lower dimension and then reconstruct the input from this lower dimension representation. In this case our goal is to reconstruct a "cleaned" version of the input, given the low dimension. We will add Dropout layers to reduce the chance of our model overfitting.

Model Evaluation - Image Similarity Metrics

To evaluate our model, we can leverage metrics for image quality assessment. That is, we want to assess the quality of a target (cleaned image), given some ground truth reference. In this example, we will explore 3 metrics for this task

- Root Mean Squared Error (RMSE). Implemented using tf.image.psnr. Implemented using tf.keras.metrics.RootMeanSquaredError

- Peak Signal to Noise Ration (PSNR)

- Structural Similarity index (SSIM). Implemented using tf.image.ssim

Conclusion

In this notebook, we looked at approaches for cleaning images, how to generated paired data samples for this task and implemented an autoencoder which achieved 0.94 SSIM score (max of 1). The autoencoder does succeed at removing non-trivial noise and background in signatures (see examples in the visualize predictions section above ).

Next Steps / Extra Credit

-

Extend the base autoencoder model. Hint (try increasing the number of layers)

-

Try out additional models architectures e.g., Unet. UNet-like models with residual connections learn low level multiscale features that can be useful for a task like noise artifact removal. Hint: experiment with a vanilla Unet, see reference implementation by Karol Zak. Also, what happens if we initialize our model using pretrained imagenet model weights?

-

Extend the data generator to include new edge cases

-

Explore offline evaluations on a set of noisy signatures extracted from signatures in the wild. Hint: Use the signver library to extract noisy signatures from documents and apply your model in cleaning them.

-

Cleaning signatures is just one step in the signature verification process, other tasks such as signature detection, and representation extraction can benefit from deep learning models. Hint: see this blog post for more info.

ART + AI — Generating African Masks using (Tensorflow and TPUs)

ART + AI — Generating African Masks using (Tensorflow and TPUs) How to Finetune BERT for Text Classification (HuggingFace Transformers, Tensorflow 2.0) on a Custom Dataset

How to Finetune BERT for Text Classification (HuggingFace Transformers, Tensorflow 2.0) on a Custom Dataset State of Deep Learning for Object Detection - You Should Consider CenterNets!

State of Deep Learning for Object Detection - You Should Consider CenterNets! How to Build MultiModal Recommender Systems with Tensorflow

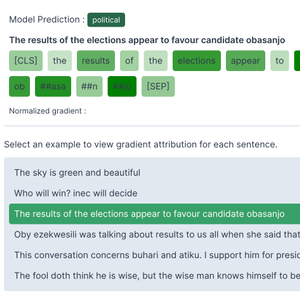

How to Build MultiModal Recommender Systems with Tensorflow How to Implement Gradient Explanations for a HuggingFace Text Classification Model (Tensorflow 2.0)

How to Implement Gradient Explanations for a HuggingFace Text Classification Model (Tensorflow 2.0) How to Speed Up Neural Network Training with Intel's Gaudi HPUs. A Tensorflow 2.0 Object Detection Example

How to Speed Up Neural Network Training with Intel's Gaudi HPUs. A Tensorflow 2.0 Object Detection Example Introducing Peacasso: A UI Interface for Generating AI Art with Latent Diffusion Models (Stable Diffusion)

Introducing Peacasso: A UI Interface for Generating AI Art with Latent Diffusion Models (Stable Diffusion) Real-Time High-Resolution Background Matting | Paper Review

Real-Time High-Resolution Background Matting | Paper Review