How to Build a Similar Posts Feature for Your Gatsby (JAMSTACK) Website using Machine Learning (Document Similarity)

If you have a Gatsby website (or a website built by a JAMSTACK static site generator such as Next, Nuxt, Jekyll, Hugo etc), one way to improve content discoverability, is to show a list of similar posts at the end of each page. We can cast this problem as a machine learning similarity search task -i.e., given a post (document), show me other posts that are similar to it.

Jamstack is a term that describes a modern web development architecture based on JavaScript, APIs, and Markup (JAM). Jamstack is an architecture designed to make the web faster, more secure, and easier to scale. It builds on many of the tools and workflows which developers love, and which bring maximum productivity. The core principles of pre-rendering, and decoupling, enable sites and applications to be delivered with greater confidence and resilience than ever before.

In this post we will explore a simple workflow for implementing this feature. Hint: the related posts section at the bottom of this blog post is powered by this workflow.

TLDR;

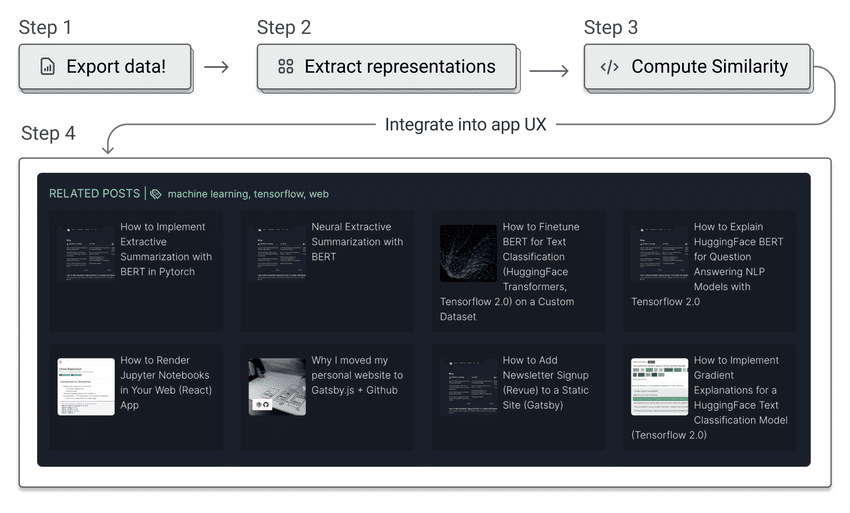

- Step 1: Export the text for each post in a format that can be loaded and processed with ease. Here, we will modify

gatsby-node.jsto export the text for each post in a JSON file. - Step 2: Extract representations for each post. The core idea here is to create vector representations of each document such that we can compute a similarity score. Here, we will write some python code that uses a a pre-trained model neural network model (Sentence BERT) to extract representations for each post.

- Step 3: Compute similarity scores. We will use the representations to compute similarity scores for each post and export a map data structure (post_slug -> [similar_posts]) in a JSON file.

- Step 4: Display results! C'est finis .

Let's get started.

But Why Use Machine Learning?

In practice, there are other ways to implement a similar posts feature. Before this effort, I had a heuristics-based approach where each post had manually assigned tags and I implemented similar posts as a list of posts with the same tags. This approach has several drawbacks - all posts had to be manually tagged, the tags had to be manually updated when a post was updated, the tags were not always semantically meaningful, the degree of relatedness was not well-captured in the list presented especially for posts with multiple tags etc. A word frequency approach (e.g., bag of words, TDIDF etc) can also be used to craft a similar posts feature and there is a nice gatsby plugin that explores this approach. Again, word frequency approaches have drawbacks - they do not capture semantics or context well (two posts might use similar words but have different meanings).

A machine learning approach can help us overcome these limitations. Specifically advances in neural language models (transformers) have shown state of the art performance of all natural language processing problems (e.g., text summarization, text classification, etc.). This is mainly because neural models learn semantically meaningful representations of text. Libraries like huggingface transformers have also removed barriers to entry for using these models.

TLDR; for NLP problems, you should start with a transformer model.

In this post, we will implement the model using python as most machine learning libraries are in python. While you can use a Tensorflow.js text model (e.g., the universal sentence encoder) in Node.js, the range of available pretrained models is limited.

Step 1: Export Posts (Get Your Data!!)

Consult your JAMStack framework to figure out how to export the text for each post in a format that can be loaded and processed with ease.

For Gatsby, depending on how your gatsby-node.js file is structured, the key step is to modify the createPages function to export the text for each post in a JSON file. Here, we will use the fs module to write data to a json file.

exports.createPages = async ({ graphql, actions }) => {const { createPage } = actionsconst result = await graphql(`query {allSamplePages {edges {node {slugtitleexcerpt(pruneLength: 2000)}}}}`)posts = result.data.allSamplePages.edgesfs.writeFile("static/posts.json", JSON.stringify(posts), "utf8", function (err) {if (err) {return console.log(err);}});

We want the excerpt, description, title, and slug. We will also export the first 2000 characters from each post as an excerpt.

Step 2: Extract Representations

We need to extract semantically meaningful representations of our text such that we can compute a similarity score. Several decisions need to be made here e.g., what model architecture do we use (e.g., BERT, RoBERTa etc), what finetuning strategy (e.g., finetuned on some task such as QA etc), what data do we use (e.g., do we use the title, description, entire post? )? etc.

Recall our goal is to get representations that lets us disambiguate similar posts from dissimilar posts. Given this goal, we ideally want a model that has been finetuned with a similar objective. Thus we can use SentenceBERT [^1].

SentenceTransformers is a Python framework for state-of-the-art sentence, text and image embeddings. The initial work is described in the paper Sentence-BERT: Sentence Embeddings using Siamese BERT-Networks. You can use this framework to compute sentence / text embeddings for more than 100 languages. These embeddings can then be compared e.g. with cosine-similarity to find sentences with a similar meaning. This can be useful for semantic textual similar, semantic search, or paraphrase mining. The framework is based on PyTorch and Transformers and offers a large collection of pre-trained models tuned for various tasks. Further, it is easy to fine-tune your own models.

SentenceBERT (SBERT) uses a siamese and triplet network structures to derive semantically meaningful sentence embeddings that can be compared using cosine-similarity. The training process explicity takes in pairs of sentences and tries to maximize the cosine similarity between the two sentences if they are semantically similar and minimize the cosine similarity if they are semantically dissimilar. This exercise in turn yields well calibrated cosine similarity scores compared to a conventional BERT model trained on the masked language modeling objective.

SentenceBERT is a good choice for several reasons:

- Provides well calibrated cosine similarity scores compared to a conventional BERT model trained on the masked language modeling objective.

- It is relatively small and can be easily run on consumer GPU hardware. For context the best performing sentence bert model is 420MB. This means it can be integrated into pipelines or run locally.

It is important to note that the quality of data used can affect performance. Ideally, we want text that is most representative of the core ideas of the post. In this example, we will concatenate the title, description, tags and excerpt (first 2000 characters) of each post. Note that transformer based models like BERT (and Sentence BERT) have a limit (max-token-length) on the maximum size of text they can process (typically 512 tokens for base models) and typically will truncate text to fit the their max token length.

Next, we install and use the sentence-transformers library (which is based on the Huggingface transformers library) in extracting representations (embeddings).

pip install -U sentence-transformers

from sentence_transformers import SentenceTransformer, utilsbert_model = SentenceTransformer('all-mpnet-base-v2')embeddings = model.encode(sentences, convert_to_tensor=True) # encode post to get embeddings

Step 3: Compute Similarity Scores

Here, we compute cosine similarity for all pairs of posts (40 * 40 posts). We then construct a score map data structure that maps each post to its top 10 most similar posts (sorted by similarity score). We can then export this map as a json file.

In the previous section, we mentioned how SentenceBERT is better suited to the task. To investigate this in more detail, we can plot a histogram of scores from all pairs of posts. As seen in the figure above, cosine similarity scores from a base BERT model are not well calibrated (saturated between 0.8 and 1 for all pairs of documents) - i.e., even dissimilar documents still have a relatively high similarity score (0.8). On the other hand the cosine similarity from a SentenceBERT model are well calibrated i.e., reasonably spread between 0 and 1 for all pairs of documents.

import jsonimport urllib.requestimport pandas as pdfrom sentence_transformers import SentenceTransformer, utilurl = 'https://victordibia.com/files/posts.json' # url to posts.json file.response = urllib.request.urlopen(url)data = json.loads(response.read())df = pd.DataFrame(data)df["content"] = df.title + " " + df.description + " " + df.excerptsbert_model = SentenceTransformer('all-mpnet-base-v2')def get_score_map(sentences, model, n=10):embeddings = model.encode(sentences, convert_to_tensor=True) # encode post to get embeddingscosine_scores = util.cos_sim(embeddings, embeddings) # compute cosine similarityscore_map = {} # data structure to store the resultsfor i in range(cosine_scores.shape[0]): # quadratic loop could be optimizedholder = []for j in range(cosine_scores.shape[1]):if i != j:holder.append({ "id": df.id[j], "image": df.image[j], "title": df.title[j], "score": float(cosine_scores[i][j].cpu().numpy()), "slug": df.slug[j]})holder = sorted(holder, key=lambda x: x['score'], reverse=True) # sort by scorescore_map[df.slug[i]] = holder[:n] # get top nreturn score_map, cosine_scores.cpu().numpy() # return score map and raw scoressbert_scoremap, sbert_scores = get_score_map(df.content, sbert_model)with open('similar.json', 'w') as fp:json.dump(sbert_scoremap, fp)

Hint: We can wrap this into a python script that updates similar.json each time a new post is added.

Step 4: Display Similar Posts

In this final step we can then load the map data structure and use it in gatsby to display similar posts. Specifically, for Gatsby, the trick will be to update gatsby-node.js and add a similarposts field to the data context [^2] that is sent to the template that renders your post page. This exact implementation might vary based on the structure of your web app.

And with these 4 steps, we are done! We now have a mechanism to show a list of similar posts for each post on our website. A quick eyeball test shows that the system is working well, already significantly better than the previous tag based system.

Final Thoughts

In this post, we started out with building a similar posts feature for a Gatsby website. We cast the problem as a machine learning similarity search task. We then used a pre-trained model (Sentence BERT) to extract representations for each post and computed similarity scores. We then exported the similarity scores as a map (post_slug -> [similar_posts]) and used it to display similar posts on each post page.

Some of the important notes (which may not be so obvious for beginners) are:

- System quality depends on data quality. The decision to include the post title, description, tags and then excerpt does impact how well the system works. These items contain dense, semantically meaningful information that characterize each document.

- Chose the right model architecture and finetuning strategy. In this case, we used a pre-trained model that was finetuned to yield well calibrated similarity scores. The plot of scores from BERT and Sentence BERT shows raw BERT is not as sensitive to semantic similarity as Sentence BERT and hence will have worse performance.

- The ML model is only useful because it solves a user (or business) problem. In this case, the model is used to solve the problem of helping users discover content on the website. We use the model to create a map data structure that maps each post to an ordered list of similar posts which we then leverage in theGatsby website build script. This sort of end to end systems thinking (i.e., how does the ML model fit in with the rest of the solution) is critical for building production systems. Knowing that we want to show details of the post informs the design of the data structure (e.g. each object for a post should contain the title, image and all other info needed to display the list etc)

Extra Credit (Considerations for Production)

If you are ML engineer exploring a similar project, using a pretrained model as described above is an excellent first step. But what are all the other things that we have ommitted that are critical for actual production systems. Let's take a look!

- Design an evalaution harness . Currently we verify the quality of the model by eyeballing the results. Ideally we want to curate a labelled test set e.g., for each post we have a list of similar posts labelled by a human. Next we select a metric (e.g., precision@k, recall@k, MAP@k etc) and compute the metric on the test set. This will give us a sense of how well the model is performing. We can then use this metric to compare different models and make decisions on which model to use.

- Optimize the model and aspects of the pipeline . With an evaluation harness in place, there are several things we can do. First, we may want to try out more complex models e.g., using the OpenAI embedding api (note that is is paid, and every time the similar posts are computed, some api costs are incurred). Next, we can finetune the model on a retrieval task where given a query, we want to retrieve the most relevant documents. We can also improve the model's performance via hyperparameter tuning during fintetuning. We can also explore methods to compress the model (e.g., quantization, pruning etc) to reduce the model size and improve inference speed. This will simplify deployment and reduce costs. Finally, we can also make the similarity computation more efficient (current implementation runs in quadratic time).

- Design a continuous deployment and monitoring system . We can do this by implmenting a pipeline that 1.) Computes an eval metric on a validation set (i.e., a held out subset of the training set) and then comparing the metric to a threshold for multiple model candidates. We can routinely update this validation set and trigger an alert if the model performance drops below the threshold. This is critical for production systems as we want to know if the model is performing as expected. 2.) Generates and exports the similar posts data structure using the best performing model on some cadence (e.g., each time a new post is added) 3.) Redeploys the website with the updated similar posts data.

References

[^1]: Reimers, Nils, and Iryna Gurevych. "Sentence-bert: Sentence embeddings using siamese bert-networks." arXiv preprint arXiv:1908.10084 (2019). [^2]: Creating and Modifying Pages https://www.gatsbyjs.com/docs/creating-and-modifying-pages/

How to Build MultiModal Recommender Systems with Tensorflow

How to Build MultiModal Recommender Systems with Tensorflow How to Render Jupyter Notebooks in Your Web (React) App

How to Render Jupyter Notebooks in Your Web (React) App How to Implement Extractive Summarization with BERT in Pytorch

How to Implement Extractive Summarization with BERT in Pytorch Why I moved my personal website to Gatsby.js + Github

Why I moved my personal website to Gatsby.js + Github Introducing Peacasso: A UI Interface for Generating AI Art with Latent Diffusion Models (Stable Diffusion)

Introducing Peacasso: A UI Interface for Generating AI Art with Latent Diffusion Models (Stable Diffusion) How to Finetune BERT for Text Classification (HuggingFace Transformers, Tensorflow 2.0) on a Custom Dataset

How to Finetune BERT for Text Classification (HuggingFace Transformers, Tensorflow 2.0) on a Custom Dataset