2022 Year in Review

2022 has been a fairly interesting year! October 2022 marked exactly 1 year as a Research Software Engineer at MSR. It has been a period of intense learning and growth. I've been fortunate to work with amazing, generous colleagues and learn from them. A technical highlight of the year has been getting to make some contributions to GitHub Copilot - a tool that has changed how developers write code today. I got to work on studying evaluation metrics for code generation models and on new metrics for evaluating the quality of generated code completions.

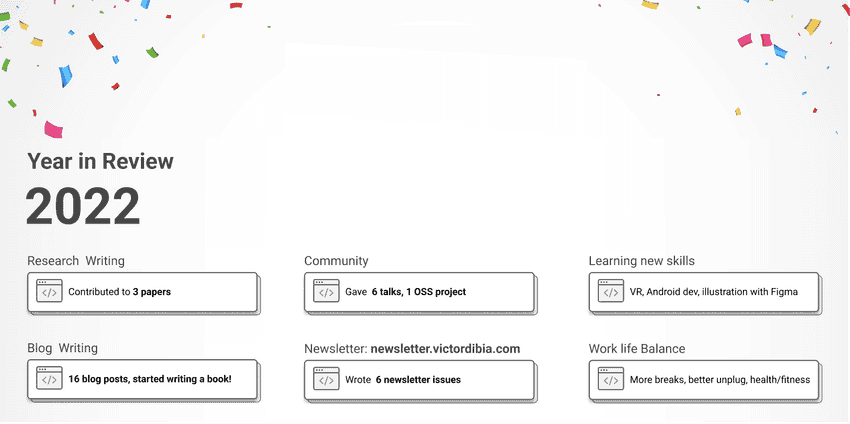

My goal for 2022 (see my 2021 year in review post) was to do more research writing, blog writing, community work, learn new skills and improve work life balance.

Progress was made.

I am excited to see what the next year brings!

Research Writing

I got to write papers on some of the research I conducted in the last year as well as independent research. Thankfully, my current role as a Research Software Engineer has been beneficial to this goal. I contributed to 3 papers this year.

Victor Dibia, Adam Fourney, Gagan Bansal, Forough Poursabzi-Sangdeh, Han Liu, Saleema Amershi. Aligning Offline Metrics and Human Judgments of Value of AI-Pair Programmers. arXiv preprint arXiv:2210.16494 (2022).

In this paper, we study how offline metrics used to evaluate code generation models (e.g., accuracy metrics such as the fraction of generated code that passes unit tests) align with human judgements of value in the pair programmer setting. Based on a study with 49 experienced developers, we found that in 42% of cases where generated code is not perfectly accurate, developers still found it valuable. We also found that when the effort associated with fixing code is low, then it is deemed to be more valuable. We also propose a new metric that combines accuracy metrics and code similarity metrics and show that it is better correlated with human judgements of value.
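To make the idea of combining the two kinds of metrics concrete, here is a minimal sketch. It is not the metric from the paper: the weighted sum, the `weight` parameter, the helper names, and the use of `difflib` as the similarity measure are all assumptions chosen for illustration.

```python
from difflib import SequenceMatcher


def passes_tests(code: str, tests: str) -> bool:
    """Return True if the candidate code runs and every assertion in `tests` holds."""
    namespace = {}
    try:
        exec(code, namespace)   # define the candidate function(s)
        exec(tests, namespace)  # run the unit tests against them
        return True
    except Exception:
        return False


def similarity(candidate: str, reference: str) -> float:
    """Character-level similarity in [0, 1] (a stand-in for a code similarity metric)."""
    return SequenceMatcher(None, candidate, reference).ratio()


def combined_score(candidate: str, reference: str, tests: str, weight: float = 0.5) -> float:
    """Hypothetical hybrid score: weighted sum of test accuracy and similarity."""
    accuracy = 1.0 if passes_tests(candidate, tests) else 0.0
    return weight * accuracy + (1 - weight) * similarity(candidate, reference)


reference = "def add(a, b):\n    return a + b\n"
near_miss = "def add(a, b):\n    return a - b\n"  # fails the test, but is one character from correct
tests = "assert add(2, 3) == 5\n"

print(combined_score(reference, reference, tests))
print(combined_score(near_miss, reference, tests))
```

The near miss fails the unit test, so an accuracy-only metric scores it 0, yet it still earns partial credit here because it would take little effort to fix. That is the intuition behind pairing accuracy with similarity.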

Gonzalo Ramos, Rick Barraza, Victor Dibia, Sharon Lo. The Aleph & Other Metaphors for Image Generation. NeurIPS 2022 Workshop on Human-Centered AI (2022).

In this position paper, we reflect on fictional stories dealing with the infinite and how they connect with the current, fast-evolving field of image generation models. We draw attention to how some of these literary constructs can serve as powerful metaphors for guiding human-centered design and technical thinking in the space of these emerging technologies and the experiences we build around them. We hope our provocations seed conversations about current and yet to be developed interactions with these emerging models in ways that may amplify human agency.

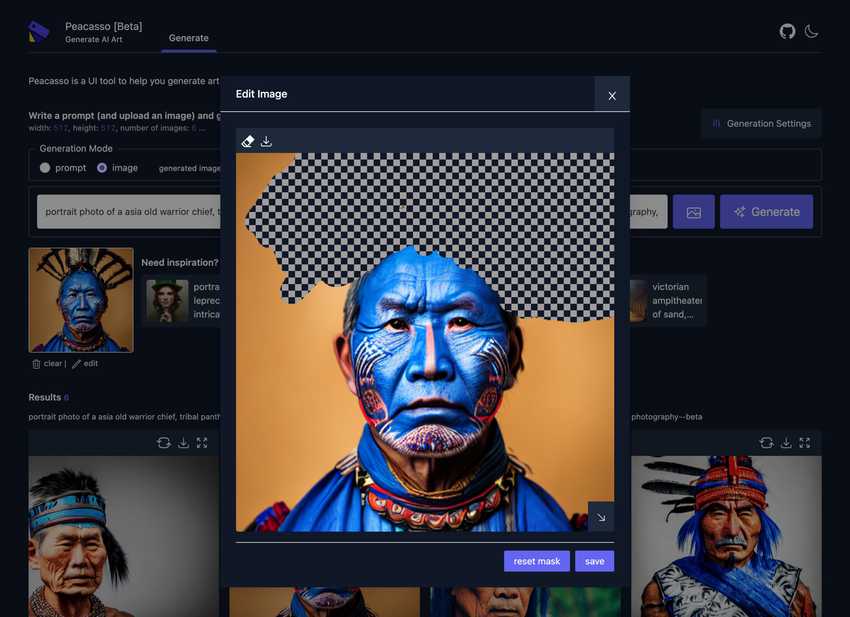

Victor Dibia. Interaction Design for Systems that Integrate Image Generation Models: A Case Study with Peacasso. Work in progress.

In this paper, I explore guidelines for designing interfaces that integrate image generation models (IGMs). First, I draw on theories from communications (media richness theory) and Human-AI interaction to derive a set of high level guidelines - designing for human centered controls, rapid feedback, personalization, transparency, capability discovery and harms mitigation. Next, I discuss Peacasso - an open source user interface that implements these guidelines, with features including an image generation editor (text/image prompting, inpainting, outpainting), process curator, control mechanisms (prompt remixing, latent space navigation) and sense making tools (prompt preview, explanations, latent space exploration). I demonstrate the utility of the Peacasso API in building novel user interactions by implementing a set of high level application use cases (e.g., presets for tiling, video generation and story generation).

Blog and Writing (I am writing a book!)

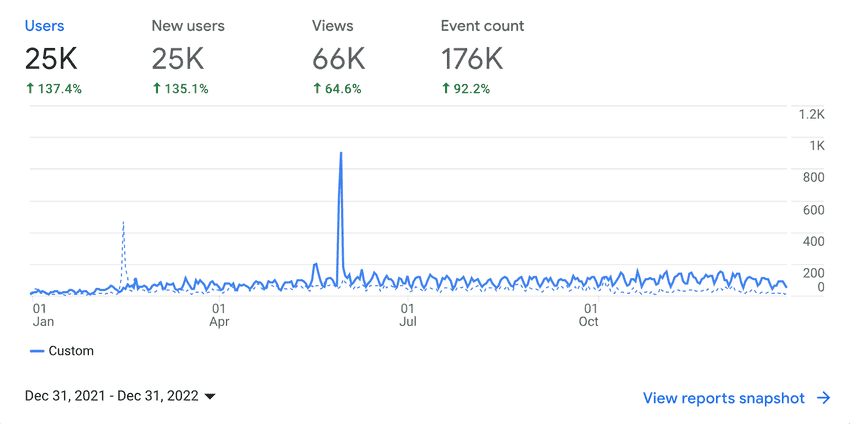

I wrote 16 blog posts this year. This included posts on web (e.g., rendering Jupyter notebooks on the web, rendering Blender models on the web), machine learning (implementing gradient explanations for TensorFlow BERT models, extractive summarization, research paper reviews etc.), and general topics (e.g., trends in ML).

I also started writing a monthly newsletter (wasn't as consistent as I'd hoped, but some progress was made!). I have written 6 newsletter issues so far, with about 100 subscribers.

I started writing a book (learn more here). Something that has been on my mind for a while now is the growing importance of user experience as a driver of competitive advantage in the ML space. Tools and services like Hugging Face and OpenAI have democratized many ML tasks, making it possible for any team to address a wide set of tasks. However, weaving the raw technical capabilities of these tools into an experience that creates value for the user is a skillset that is not immediately obvious to teams. I am writing a book that explores this idea and hope to get it done by December 2023 (yeah .. that's ambitious and I intend to be accepting of whatever progress I can make!).

Community

Made some progress on reconnecting with the ML community! 6 talks, co-organized 1 conference workshop, contributed 1 open source library.

Conferences and Talks

- Conferences: I helped co-organize the Machine Learning Efficiency workshop at the 2022 Indaba conference. I didn't get to attend in person this year, but I hope to do that next year. I also got to attend and present at the 2022 Google Developer ML Summit (this was such a great experience, thanks to the organisers!!).

- Talks: I also got to give a few talks this year: Trends in ML at the Chinese University of Hong Kong, Code Generation Models and Developer Productivity at Deakin University, ML on Android at Google Developers Machine Learning Bootcamp, and Intro to ML at Technology University Jamaica.

OSS Contribution

Peacasso is definitely the most exciting OSS project I worked on this year. Diffusion models have shown impressive performance on text-to-image generation and continue to get better - higher image quality, smaller model size, faster generation. All of these make these models ready for consumer applications. However, the right user experience (e.g., how do we reduce trial and error for users, improve user efficiency) is still a challenge. Peacasso is an attempt to address this challenge. Along this journey, I learned a lot about how diffusion models work, the usability challenges associated with them, and what it takes to serve these models at scale.

Peacasso is both a test bed to experiment with UI ideas and a research tool that others can build with. It achieves this by providing a user interface and a backend Python API. Learn more about Peacasso and its goals here. It is an ongoing project and I hope to keep improving it in the coming year.

I also worked on a few other "things" that are not yet ready for prime time - mostly on tools for evaluating code generation models. I hope to get to them in the coming year.

Learning New Skills

This year, I spent some time getting familiar with VR (I learned to build basic VR experiences on the Meta Quest 2 VR headset and wrote about it here), revisited Android development with Jetpack Compose (building Android apps with Compose and running local ML models on Android, which I wrote about here), learned to use Figma (e.g., the diagrams in the Peacasso paper draft were created with Figma), and spent time learning to write software that is more usable and more robust (tests, typing in Python, Python packaging, designing authentication for web APIs etc.).

There has also been an explosion in two aspects of ML in 2022 - LLMs (GPT series, ChatGPT) and image generation models (Stable Diffusion et al). Building with these models, both as part of my personal experimentation and official work, has also been a great learning experience.

Work Life Balance

There are many different ways to define work life balance or measure improvements in it. For me, this year, I invested in spending quality time with my family (especially experiences with our son, who is 3.5 years old already! Time flies!). One way I measured progress (quality) was by ensuring I took real breaks where I truly unplugged (no phones, no side projects) from all types of work (something I did not do previously). I traveled more (3 international trips compared to 0 last year, plus interstate trips). There was also some progress on the fitness and health front (I can now hold a 5 second front lever pose for the first time, and do a half decent bridge). I am fortunate to be at a point in my career where I am able to do this. I hope to continue to do this in the coming year.

Cheers to a Better New Year Ahead!

For 2023, I'd like to continue improving on the same things - research, writing, community contributions, learning new things, personal health/fitness.

Cheers to everyone who made it to the end of the year. You did great, you are awesome, you are appreciated, you rock! Happy New Year!
