U2Net: Going Deeper with Nested U-Structure for Salient Object Detection | Paper Review

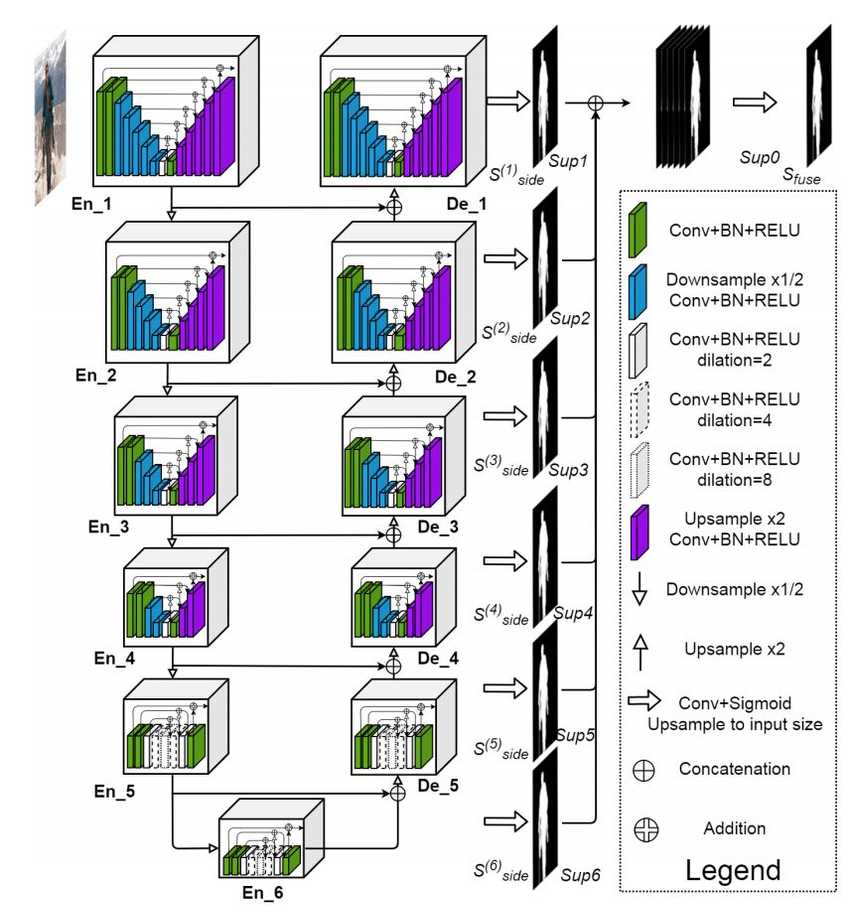

This post summarizes the paper by Qin et al. (2020)[^1], which proposes a deep UNet-like model (essentially a UNet whose stages are themselves UNet-ish blocks) for salient object detection (the task of segmenting the most visually attractive objects in an image).

Overview and Technical Contributions

Salient Object Detection

Salient object detection is the task of segmenting out the visually interesting parts of an image. While this problem feels simple (we don't need to identify multiple segments or label them), it is also open-ended and non-trivial: we need to know what makes something interesting and where that interesting thing begins and ends. How do we define "interesting", and how do we identify the boundaries of each salient object?

Existing Approaches

An intuitive approach here is transfer learning: rely on pretrained models as backbones that understand images well enough to solve classification problems, and leverage their learned features to predict salient pixels. The majority of existing models for salient object detection take this approach. However, pretrained backbones are FLOP-intensive. They may also encode a large amount of information that is not particularly useful for segmentation. More importantly, they are not specifically designed to encode both global information and local detail, both of which are required for fine-grained segmentation. So, can we do better?

The U2Net Approach

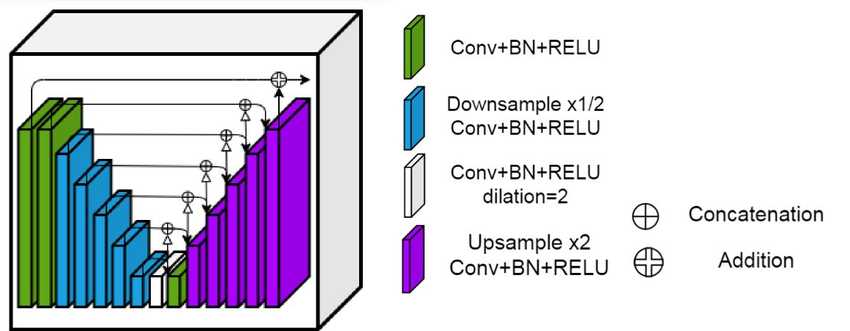

Perhaps the most interesting contribution of this paper is the introduction of residual U-blocks, together with ablation studies showing that they do improve performance metrics. The authors' intuition is that the residual connections within each U-block enable a focus on local details, while the overall residual U-Net architecture enables fusing these local details with global (multi-scale) contextual information.
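To make the dataflow concrete, here is a toy 1-D sketch of a residual U-block in plain Python. It is not the authors' implementation. The helpers `smooth`, `downsample`, `upsample` and `u_structure` are hypothetical stand-ins I made up for illustration: a moving average replaces the learned convolutions, and averaging replaces the channel concatenation a real U-Net uses for skip connections. The only part meant to mirror the paper is the final line, where the input features are added back to the output of the inner U-structure, i.e. roughly `F1(x) + U(F1(x))` as I read it.

```python
from typing import List

Signal = List[float]


def smooth(x: Signal) -> Signal:
    """Stand-in for a learned conv layer: 3-tap moving average with edge padding."""
    padded = [x[0]] + list(x) + [x[-1]]
    return [(padded[i] + padded[i + 1] + padded[i + 2]) / 3.0 for i in range(len(x))]


def downsample(x: Signal) -> Signal:
    """2x average pooling (assumes an even-length input)."""
    return [(x[i] + x[i + 1]) / 2.0 for i in range(0, len(x), 2)]


def upsample(x: Signal) -> Signal:
    """2x nearest-neighbour upsampling."""
    return [v for v in x for _ in range(2)]


def u_structure(x: Signal, depth: int) -> Signal:
    """Toy encoder-decoder: descend `depth` levels, then come back up."""
    enc = smooth(x)
    # Stop at the bottom of the U, or when the signal can no longer be halved.
    if depth == 0 or len(enc) < 2 or len(enc) % 2 != 0:
        return enc
    deeper = u_structure(downsample(enc), depth - 1)
    up = upsample(deeper)
    # Skip connection (assumption: averaging instead of channel concatenation).
    merged = [(a + b) / 2.0 for a, b in zip(enc, up)]
    return smooth(merged)


def residual_u_block(x: Signal, depth: int) -> Signal:
    f1 = smooth(x)              # input layer: local features
    u = u_structure(f1, depth)  # inner U: multi-scale features
    return [a + b for a, b in zip(f1, u)]  # residual fusion of the two


if __name__ == "__main__":
    signal = [0.0, 0.0, 1.0, 1.0, 5.0, 1.0, 0.0, 0.0]
    print(residual_u_block(signal, depth=2))
```

The output has the same length as the input, which is what lets these blocks be stacked as the stages of a larger U-shaped network.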

- Residual U-blocks capture intra-stage multi-scale features.

- Features are downsampled within the U-structure, so most operations are applied to the downsampled feature maps.
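To get a feel for why operating on downsampled maps matters, here is a back-of-the-envelope sketch that counts multiply-accumulate operations (MACs) for 3x3 convolutions. The numbers are my own illustrative assumptions (a 256x256 feature map, 64 channels, 4 levels, one convolution per level, encoder side only), not figures from the paper, and the function names are hypothetical.

```python
def conv_macs(height: int, width: int, c_in: int, c_out: int, kernel: int = 3) -> int:
    """MACs for one stride-1, same-padded conv layer."""
    return height * width * c_in * c_out * kernel * kernel


def u_structure_macs(height: int, width: int, channels: int, levels: int) -> int:
    """One conv per level, halving the spatial size after each level."""
    total = 0
    h, w = height, width
    for _ in range(levels):
        total += conv_macs(h, w, channels, channels)
        h, w = h // 2, w // 2
    return total


def plain_stack_macs(height: int, width: int, channels: int, levels: int) -> int:
    """Same number of convs, but all applied at full resolution."""
    return levels * conv_macs(height, width, channels, channels)


if __name__ == "__main__":
    u_cost = u_structure_macs(256, 256, 64, 4)
    plain_cost = plain_stack_macs(256, 256, 64, 4)
    print(f"U-structure: {u_cost:,} MACs")
    print(f"Plain stack: {plain_cost:,} MACs")
    print(f"Ratio: {plain_cost / u_cost:.2f}x")
```

Under these assumptions the plain stack costs roughly three times as much, because each halving of the resolution cuts the per-layer cost to a quarter. A real block also has decoder convolutions and varying channel widths, so treat this as intuition rather than a measurement.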

Verdict - 8/10

- Good motivation

- Clear writing

- Reproducible

- Lends itself to real-world use cases (small models, good examples)

Applied Research Implications

As an applied ML researcher, I tend to evaluate ML papers in terms of two questions: how can these ideas improve existing solutions, and do they achieve a net positive while balancing critical latency and accuracy tradeoffs? I think this paper does well on both.

The ideas introduced suggest that the standard transfer learning practice of reusing pretrained model backbones is inefficient for some problems. For tasks where we probably do not need specific knowledge or concepts (e.g. segmentation without classification, as in this case, or a small set of classes) and for which we have sufficient training data, we might get improved performance at a lower computational cost. Building on this, it might be worthwhile to consider the U2Net architecture for problems such as:

- Landmark segmentation (segmenting landmarks, vegetation, etc. from satellite imagery)

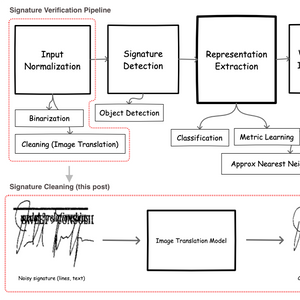

- Signature recognition. The model is optimized to learn both fine local details and global details, which is potentially useful for signature matching.

References

[^1]: Qin, Xuebin, et al. "U2-Net: Going deeper with nested U-structure for salient object detection." Pattern Recognition 106 (2020): 107404.
