• 1 min read

CodeXGLUE: A Machine Learning Benchmark Dataset for Code Understanding and Generation | Paper Review

A review of the March 2021 paper on CodexGLUE, a dataset for code understanding and generation

It has been great to see the machine learning community begin to pay attention to dataset creation and allocate conference and workshop tracks to it (see Neurips 2020 and 2021 workshop on datasets). I have recently been looking at deep learning for program synthesis and this post is my brief review on the CodexGLUE dataset paper which focuses on code generation and code understanding tasks.

Review Notes

Note: This review aims to provide a high level summary for an applied audience. The avid reader is encouraged to explore additional resources below.

Introduction

This paper contributes a dataset benchmark for code understanding and generation tasks as well as baseline models.

Background

Notes on motivation and background for this work.

By developing models that understand code, we can improve developer productivity

The authors refer to the broad space of creating tools and models that understand code as code intelligence (automated program understanding and generation). These models can then be applied to a slew of downstream coding related tasks.

Method

Methods, experiments and insights

A list of 10 code intelligence tasks

This paper describes 10 code understanding and generation tasks.

1.) Clone detection (semantic similarity)

2.) Defect detection (predict if code contains bugs)

3.) Cloze test (predict masked token given code snippet)

4.) Code completion (predict next tokens given some context)

5.) Code repair (produce bug free version of code)

6.) code-to-code translation (translate from one programming language to another)

7.) Natural language code search (retrieve code based on text query, predict if code answers a NL text query)

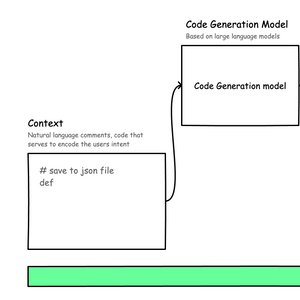

8.) Text- to-code generation (explicitly generate code given some natural language query)

9.) Code summarization (generate natural language summaries or comments given some code)

10.) Documentation translation (translate code documentation from one natural language to another).

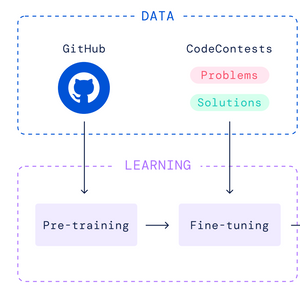

Baseline models

This paper describes results from training versions of 3 baseline models to address the 10 tasks described. They train a

1.) BERT style model for program understanding problems (clone detection, defect detection, cloze test, code search),

2.)GPT-style pretrained model called CodeGPT for completion and generation problems (based on GPT-2) which is trained from scratch on code and has vocabulary based on code tokens. A version of this model (CodeGPTAdapted) is also initialized from GPT-2 which retains GPT-2 vocabulary and language understanding capabilities but finetuned on code.

3.) Encoder-Decoder model for sequence to sequence translation problems.

Key Contributions

A summary on some interesting ideas proposed in this work Dataset source, language and training costs for CodexGLUE. Source: CodexGLUE paper.

Dataset source, language and training costs for CodexGLUE. Source: CodexGLUE paper.

A list of Datasets Relevant to Code Intelligence Tasks

The paper discusses how they curate datasets for each task built from multiple sources. Each of these source datasets are useful for training models for each task.

BigCloneBench (86k files, Java, Clone Detection),

POJ-104 (104 programs, C/C++, Clone Detection),

Devign (27k files, C, Defect Detection)

PY150 (150k files, Python, Code Completion) ,

Github Java Corpus (140k files, Java, Code Completion),

Bugs2Fix (Java, Code repair),

CONCODE (33k projects, Java, Text to Code), and

CodeSearchNet (2.4M functions, 6 languages, Code Summarization, Code Search).

Pretrained Models available on HuggingFace and Insights on Training

The authors make their pretrained models available on HuggingFace. A set of baselines and insights on tokenisation strategies, training paradigms are also provided.

Metrics for Task Performance

1.) Clone detection - MAP, F1

2.) Defect detection - Accuracy

3.) Cloze test - Accuracy

4.)Code completion - Exact match, edit distance

5.) Code search - MRR

6.) Text to code generation - EM, BLEU, CodeBLEU

7.) Code translation - EM, BLEU, CodeBLEU

8.) Code repair - BLEU, CodeBLEU

9.) Code summarization - Smoothed BLEU

10.) Documentation translation - BLEU,

Insights on Relative Model Performance

Results from the baseline experiments in this paper are instructive on the relative performance of multiple model architectures for each task. For example:

CodeBERT model appears to outperform a few baseline models that leverage code AST information on the task of clone detection (Table 5.).

CodeBERT outperforms a RoBERTa and BiLSTM baseline on defect detection.

CodeGPTAdapted which is initialized on GPT-2 outperforms other baselines on text to code and code completion baselines hinting at the value of natural language pretraining to those specific tasks.

Insights on building parrallel copora for code translation and bug detection

Interesting ideas on how to build parallel corpora of code in multiple languages for projects developed in one language and ported to another. Interesting ideas on how to detect buggy code from the Tufano paper … commits having the pattern (bug issue, fix solve) and extract before and after.

Limitations and Open Questions

A summary on some limitations of the paper and open questions

Limited Experiments

More could be done to standardize the experiments reported. For example, on the clone detection task a RoBERTa model trained on natural langauge is compared to a CodeBERT model finetuned on the the CodeSearchNet dataset. Also some commentary as to the suitability of evaluation metrics would be useful. E.g., what are limitations of match based metrics such as exact match, BLEU and even CodeBLEU and how they translate to real world performance?

Reporting on Language Coverage Per Task

One way in which this paper could be improved would be explicit discussions on the coverage of the dataset in terms of languages for each task, and any implications this may have when CodeXGLUE is applied in practice. For example, the datasets for clone detection are sourced from BigCloneBench and POJ 104 which focus on Java and C/C++ only. Similarly the code repair dataset is focused on Java (see section 3.7). It is unclear how well these will generalize to other programming languages (or at least the top 5 programming languages in use today), if at all.

Application Opportunities

Commentary on potential use cases for this work

Code generation research and practice

Most of the tasks described have clear real world applications. For example, code completion can improve the code writing experience within editors (see Codex, the code completion model that powers GitHub CoPilot), code repair is used to fix bugs, and code translation can help in porting programs across multiple languages.

Summary

This paper is valuable for understanding available datasets for multiple tasks and metrics related to code understanding and generation. It is likely that the dataset in it's current form will need some modifications in order to be useful for training competitive models. The authors note continuous planned updates to the dataset to include more languages and additional tasks.

References

List of references and related works/projects.[1]: Chen, M., Tworek, J., Jun, H., Yuan, Q., Pinto, H.P.D.O., Kaplan, J., Edwards, H., Burda, Y., Joseph, N., Brockman, G. and Ray, A., 2021. Evaluating large language models trained on code. arXiv preprint arXiv:2107.03374.

[2]: Austin, J., Odena, A., Nye, M., Bosma, M., Michalewski, H., Dohan, D., ... & Sutton, C. (2021). Program synthesis with large language models. arXiv preprint arXiv:2108.07732.

[3]: Xu, F.F., Alon, U., Neubig, G. and Hellendoorn, V.J., 2022. A Systematic Evaluation of Large Language Models of Code. arXiv preprint arXiv:2202.13169.

[4]: Pradel, M. and Chandra, S., 2021. Neural software analysis. Communications of the ACM, 65(1), pp.86-96.

[5]: Lu, S., Guo, D., Ren, S., Huang, J., Svyatkovskiy, A., Blanco, A., Clement, C., Drain, D., Jiang, D., Tang, D. and Li, G., 2021. CodeXGLUE: A machine learning benchmark dataset for code understanding and generation. arXiv preprint arXiv:2102.04664.

Discuss This Paper

Did you read the CodexGLUE paper and have some thoughts on its contributions/limitations? Let's discuss it on Twitter.

Interested in more articles like this? Subscribe to get a monthly roundup of new posts and other interesting ideas at the intersection of Applied AI and HCI.

Read and Subscribe

Powered by Substack. Privacy Policy.

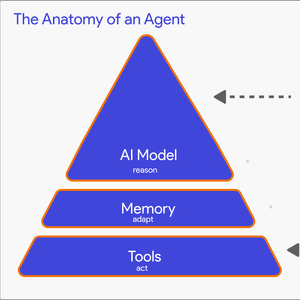

State of Deep Learning for Code Generation (DL4Code)

State of Deep Learning for Code Generation (DL4Code) AlphaCode: Competition-Level Code Generation with Transformer Based Architectures | Paper Review

AlphaCode: Competition-Level Code Generation with Transformer Based Architectures | Paper Review 2023 Year in Review

2023 Year in Review Top 10 Machine Learning and Design Insights from Google IO 2021

Top 10 Machine Learning and Design Insights from Google IO 2021 How to Explain HuggingFace BERT for Question Answering NLP Models with Tensorflow 2.0

How to Explain HuggingFace BERT for Question Answering NLP Models with Tensorflow 2.0 The Agent Execution Loop: Building an Agent From Scratch

The Agent Execution Loop: Building an Agent From Scratch How to Implement Gradient Explanations for a HuggingFace Text Classification Model (Tensorflow 2.0)

How to Implement Gradient Explanations for a HuggingFace Text Classification Model (Tensorflow 2.0)