Top 10 Machine Learning and Design Insights from Google IO 2021

This post is a summary of my top 10 design and machine learning insights from Google IO 2021. In this list, I'll focus on announcements that touch on two topics - Machine Learning and HCI/Design. Before I jump in, here are some general stats and announcements:

- Android is now on 3 billion devices.

- Google Lens translates over 1 billion words every day

- TensorFlow Lite is used on over 4 billion devices, MLKit has 500 million monthly active users and TensorFlow.js has 50 million CDN hits.

- TPUv4 pods are now released and they are 2x faster than TPUv3.

- Google announced Project Starline - live 3D video conferencing. Capturing and streaming high resolution 3D data (100s of GBs per minute) is hard. They achieve this via novel compression (100x) and streaming algorithms.

- Tree based models in TensorFlow - TensorFlow Decision Forests (TF-DF). TF-DF is a collection of production-ready state-of-the-art algorithms for training, serving and interpreting decision forest models (including random forests and gradient boosted trees). You can now use these models for classification, regression and ranking tasks - with the flexibility and composability of TensorFlow and Keras.

Now to the top 10 list.

LaMDA - An Open Domain Conversational Agent

Google's Language Model for Dialogue Applications. Photo, Google.

Google is experimenting with language models for open domain conversation - Language Model for Dialogue Applications, aka LaMDA. This is interesting as open domain conversation is **notoriously hard**[^1]. LaMDA draws on existing data corpora across multiple domains to generate responses. Once the rough edges are smoothed out (it is still in the research phase and has quirks), Google hopes to apply it to products like Google Assistant, Search and Workspace, making them more accessible and easier to use.

LaMDA is built on a transformer based model trained on dialogue data, with an earlier version described in Adiwardana et al.[^2].

Multi Modal Search

Multi modal models can embed data across multiple modalities (text across multiple languages, images, speech, videos, routes etc) into a shared semantic space and enable new use cases. Photo, Google.

With neural networks, an interesting trend is the use of multi modal models that can embed data across multiple modalities (text across multiple languages, images, speech, videos, routes etc.) into a shared semantic space. Doing this enables interesting use cases such as cross modal retrieval (e.g. show me all parts of a video that are related to this text query). As Google mentions, multi modal models allow new types of queries - "show me a route with beautiful mountain views" or "show me the part in the video where the lion roars at sunset".
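To make the idea of a shared semantic space more concrete, here is a minimal Python sketch of cross modal retrieval. Everything in it is an assumption for illustration: the embedding vectors are hand-written toy values, and the segment labels and the `retrieve` helper are hypothetical. A real system would get its embeddings from a trained multi modal model, and this says nothing about how Google's models work internally.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def retrieve(query_embedding, items, top_k=1):
    """Return the names of the top_k items closest to the query."""
    ranked = sorted(
        items.items(),
        key=lambda kv: cosine_similarity(query_embedding, kv[1]),
        reverse=True,
    )
    return [name for name, _ in ranked[:top_k]]


# Toy embeddings of video segments (hypothetical values, not model output).
video_segments = {
    "00:00-00:30 savanna at noon": [0.9, 0.1, 0.0],
    "00:30-01:00 lion roars at sunset": [0.1, 0.9, 0.3],
    "01:00-01:30 birds at a waterhole": [0.2, 0.1, 0.9],
}

# Toy embedding of a text query, assumed to live in the same space
# as the video segment embeddings.
text_query_embedding = [0.0, 1.0, 0.2]

best_match = retrieve(text_query_embedding, video_segments)
print(best_match)
```

Because the text query and the video segments are represented in the same space, retrieval reduces to a nearest-neighbour lookup, regardless of which modality each vector came from.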

Google talked about one implementation - the Multitask Unified Model (MUM), which is based on a transformer model and, according to Google, is 1000x more powerful than BERT. MUM can acquire deep knowledge of the world, generate language, is trained on 75+ languages and understands multiple modalities.

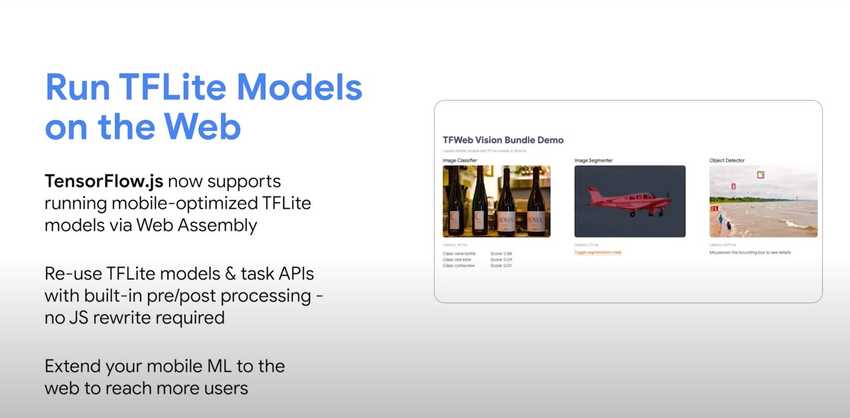

Tensorflow.js and Tensorflow Lite - Improved Performance, TFLite Integration

I have worked with Tensorflow.js extensively in the past and I am quite excited about the recent updates.

- TensorFlow Lite models will now run directly in the browser. With this option, you can unify your mobile and web ML development with a single stack.

- TensorFlow Lite is bundled with Google Play Services, which means your Android app APK file is smaller.

- Profiling tools. TensorFlow Lite includes built-in support for Systrace, integrating seamlessly with Perfetto for Android 10. For iOS developers, TensorFlow Lite comes with built-in support for signpost-based profiling.

- Model Optimization Toolkit. The TensorFlow Model Optimization Toolkit is a suite of tools for optimizing ML models for deployment and execution. Use it to:

  - Reduce latency and inference cost for cloud and edge devices (e.g. mobile, IoT).

  - Deploy models to edge devices with restrictions on processing, memory, power-consumption, network usage, and model storage space.

  - Enable execution on and optimize for existing hardware or new special purpose accelerators.

- MLKit. ML Kit brings Google’s machine learning expertise to mobile developers in a powerful and easy-to-use package. Make your iOS and Android apps more engaging, personalized, and helpful with solutions that are optimized to run on device. MLKit (for Android and iOS) now allows you to host your models so that they can be updated without updating your app!
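As a rough illustration of how the kind of optimization mentioned in the Model Optimization Toolkit item can shrink a model, here is a sketch of symmetric 8-bit weight quantization in plain Python. This is a generic textbook technique written from scratch, not the toolkit's API; the function names and the example weights are made up for illustration.

```python
def quantize_int8(weights):
    """Map float weights to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    # Guard against an all-zero tensor, where any scale works.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from their int8 representation."""
    return [q * scale for q in quantized]


# Hypothetical weights; a real layer would have thousands or millions.
weights = [-1.0, -0.5, 0.0, 0.25, 1.0]
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)

# The largest round-trip error is bounded by half a quantization step.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(quantized, scale, max_error)
```

Each weight is stored in one byte instead of four, at the cost of a small, bounded rounding error. That trade-off is what makes this family of techniques attractive for edge devices with tight storage and memory budgets.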

ML and Health

There were several interesting ML and Health Applications.

- Using AI to improve breast cancer screening. Google, in collaboration with clinicians, is exploring how artificial intelligence could help radiologists spot the signs of breast cancer more accurately.

- Improving tuberculosis screening using AI. To help catch the disease early and work toward eventually eradicating it, Google researchers developed an AI-based tool that builds on their existing work in medical imaging to identify potential TB patients for follow-up testing.

- Using an Android phone's camera to identify skin conditions. The user uploads 3 photos of the affected area and a model provides a list of possible conditions with links to learn more. This app has been CE marked as a Class I medical device in the EU.

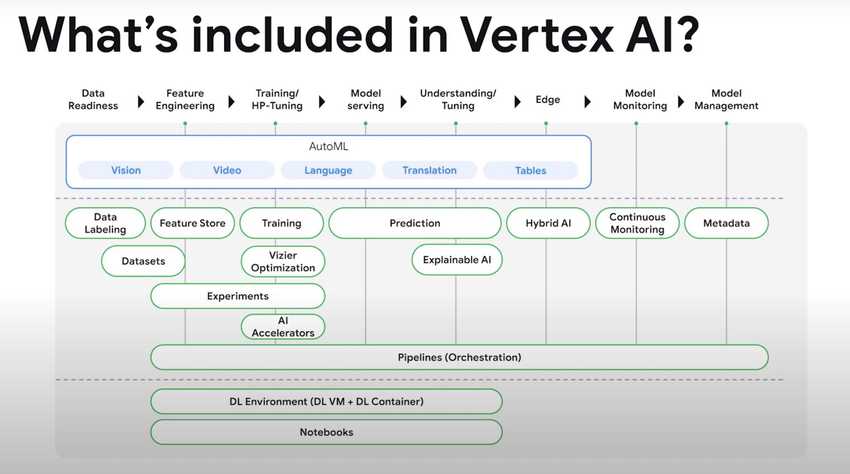

Vertex AI

Google introduced a new product, Vertex AI, and describes it as a managed machine learning (ML) platform that allows companies to accelerate the deployment and maintenance of artificial intelligence (AI) models. The goal here seems to be streamlining tasks within the AI product lifecycle (which were previously scattered across multiple GCP services). From the look of things, Vertex AI folds in all of the functionality of the previous GCP AI Platform product - "Vertex AI brings AutoML and AI Platform together into a unified API, client library, and user interface."

Google claims that Vertex AI requires nearly 80% fewer lines of code to train a model versus competitive platforms, enabling data scientists and ML engineers across all levels of expertise to deploy more useful AI applications, faster.

Automated Design Palettes/Elements for Android

By looking at the pixel content of your wallpaper image, Google will suggest a matching color palette for the entire Android experience. Dubbed "Material You", it ships with the new Android 12 OS.
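The details of how Android picks the palette are not covered here, so the following is only a rough sketch of the general idea of deriving colors from pixel content. It assumes a simple approach, coarse color quantization followed by counting the most frequent buckets, which is my own stand-in and not Google's algorithm. The function names and the tiny "wallpaper" are hypothetical.

```python
from collections import Counter


def dominant_colors(pixels, num_colors=3, bucket_size=32):
    """Return the most common colors after coarse quantization.

    Each RGB channel is snapped to the centre of a bucket of width
    bucket_size so that near-identical shades are counted together.
    """
    def quantize(channel):
        return (channel // bucket_size) * bucket_size + bucket_size // 2

    counts = Counter(
        tuple(quantize(channel) for channel in pixel) for pixel in pixels
    )
    return [color for color, _ in counts.most_common(num_colors)]


def to_hex(color):
    """Format an (r, g, b) tuple as a CSS-style hex string."""
    return "#{:02x}{:02x}{:02x}".format(*color)


# A toy "wallpaper": mostly blue, some yellow, one near-black pixel.
wallpaper = (
    [(20, 40, 200)] * 6
    + [(25, 45, 210)] * 2
    + [(240, 200, 30)] * 3
    + [(10, 10, 10)]
)

palette = [to_hex(c) for c in dominant_colors(wallpaper, num_colors=2)]
print(palette)
```

The two slightly different blues land in the same bucket and are counted as one color, which is the main reason to quantize before counting.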

Giving users ML and Making it Controllable

ML and ML-powered recommendations can be useful. For example, consider the helpful feature in Google Photos that curates interesting memories and allows you to revisit and share them - I love it! However, ML models often optimize for the average use case or average user. This can result in suboptimal or even painful experiences for edge cases - e.g. memories from a time you'd like to forget - ouch! What's worse is when you can't control it.

Google is attempting to change that by giving users control over how these sorts of helpful ML features work. What does it mean to give users meaningful control? Here are some ways in which Google is implementing this:

- Ability to control memories in Google Photos. There are controls to hide photos of certain time periods or people, rename highlights or remove them altogether, and remove individual photos from memories.

- Managing location history settings. Users are reminded that they see location recommendations in Maps because they have location history turned on, and are given a link to modify that setting.

Privacy - Android Privacy, Privacy Sandbox, Differential Privacy Library

Google IO had several interesting announcements on privacy across multiple products. Some of the ones I found really interesting were:

- Android Privacy dashboard: a list of apps that have accessed the microphone, camera and location in the last 24 hours.

- Android Private Compute Core: a private compute core is used to process the private data needed for intelligent features such as app suggestions, now playing, live caption, screen attention, smart reply etc., which run locally. This private compute core is isolated from the rest of the OS, from other apps and from the network, making it secure and confidential.

Learn more about new announcements in Android privacy in this short 15 minute video.

- Password locks for specific photos in the Google Photos app.

- Privacy Sandbox. The Privacy Sandbox initiative aims to create web technologies that both protect people’s privacy online and give companies and developers the tools to build thriving digital businesses to keep the web open and accessible to everyone. Some goals include:

  - Prevent tracking as you browse the web.

  - Enable publishers to build sustainable sites that respect your privacy.

  - Preserve the vitality of the open web.

  Learn more at https://privacysandbox.com/

- Google's Differential Privacy Library implementations are available on GitHub.
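For readers new to differential privacy, here is a minimal sketch of the Laplace mechanism applied to a count query, written in plain Python. It is a textbook illustration only and does not use or mirror the API of Google's library; `private_count` and the sample data are hypothetical. Naive floating-point noise sampling like this is also not hardened against known attacks, so real deployments should rely on a vetted library instead.

```python
import random


def private_count(values, predicate, epsilon, rng):
    """Return a noisy count of the values that satisfy predicate.

    Adding or removing one person changes a count by at most 1, so the
    sensitivity is 1 and the Laplace noise scale is 1 / epsilon.
    """
    true_count = sum(1 for v in values if predicate(v))
    sensitivity = 1.0
    scale = sensitivity / epsilon
    # The difference of two independent exponential samples with mean
    # `scale` follows a Laplace distribution centred on 0 with that scale.
    noise = rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)
    return true_count + noise


# Hypothetical data: ages of five users.
ages = [23, 35, 41, 52, 67]
rng = random.Random(0)  # seeded only to make the sketch reproducible

# Smaller epsilon means more noise and stronger privacy.
noisy = private_count(ages, lambda age: age >= 40, epsilon=1.0, rng=rng)
print(noisy)
```

The reported value is close to the true count on average, but any single answer is noisy enough that the presence or absence of one individual is hard to infer from it.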

Strides in Accessibility, Inclusivity and Responsible AI

Across Google IO, there were a few interesting projects that touched on accessibility and inclusivity, which is great!

- Android/Pixel Live Caption. With a single tap, Live Caption automatically captions speech on your device. Use it on videos, podcasts, phone calls, video calls, and audio messages - even stuff you record yourself. At Google IO, a really nice use case showed how it allowed deaf individuals to make phone calls.

- Improved auto white balance for users with darker skin tones. Google is collecting more data for users with black and brown skin to ensure its algorithms make fewer mistakes (e.g. over brightening, desaturation etc.) for these users.

- Project Guideline - a research project that explores using computer vision to enable blind individuals to run without assistance.

- People + AI Guidebook 2.0. The People + AI Guidebook is a set of methods, best practices and examples for designing with AI.

- Unlock your car with your Android device! Google is working with auto makers to create a digital key that allows you to securely lock, unlock and start a vehicle from your Android smartphone. The digital car keys will become available on select Pixel and Samsung Galaxy phones later this year, according to Sameer Samat, VP of PM for Android & Google Play.

- Know Your Data - a tool to understand datasets with the goal of improving data quality and helping mitigate fairness and bias issues. I really appreciate the design thought and engineering that went into this tool - it visualizes properties of very large datasets (e.g. the entire ImageNet, MS COCO and 70+ other datasets).

Wearables - Google WearOS + Samsung Tizen + Fitbit

Google announced a new unified wearable platform that combines the best of WearOS and Samsung's Tizen, along with hardware/software advances from Fitbit (recently acquired by Google). The features I found most interesting were the focus on battery life, improved sensors and watch-only functionality (e.g. music and maps without a phone connection).

Wrist worn wearable devices continue to be the more successful class of wearables; this partnership/unification and new features could see it realize its true potential.

Conclusions

A lot of things were announced at IO this year - I definitely did not cover them all. It's really impressive how much innovation and progress is made every year. Overall, I really loved the format of each breakout session - 15 minutes that summarize the most important updates! I found this to be considerate of the viewers' time.

References

[^1]: Roller, Stephen, et al. "Open-domain conversational agents: Current progress, open problems, and future directions." arXiv preprint arXiv:2006.12442 (2020).

[^2]: Adiwardana, Daniel, et al. "Towards a human-like open-domain chatbot." arXiv preprint arXiv:2001.09977 (2020).
