
AlphaCode: Competition-Level Code Generation with Transformer Based Architectures | Paper Review

A review of the Feb 2022 AlphaCode paper from DeepMind.

DeepMind recently announced the release of AlphaCode - an encoder-decoder transformer model for generating code (solutions to competitive programming problems). This post is a brief review of their paper.

Review Notes

Note: This review aims to provide a high level summary for an applied audience. The avid reader is encouraged to explore additional resources below.

Introduction

AlphaCode applies an encoder-decoder transformer based model to the task of generating solutions for competitive programming problems.

Background

Notes on motivation and background for this work.

LLMs work well for code generation

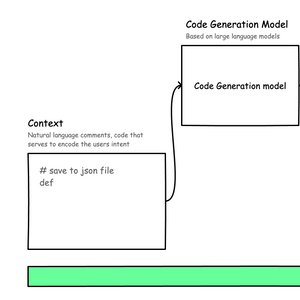

Large language models have shown competitive results when applied to code generation, i.e., translating natural language problem statements into source code in a general-purpose language (e.g., JavaScript, Python, C++).

Generating snippets vs full programs

Existing projects like Codex[1] focus on translating natural language descriptions (comments) to code. The authors claim that generating full programs requires 'understanding complex natural language descriptions, reasoning about previously unseen problems, mastering a wide range of algorithms and data structures, and precisely implementing solutions that can span hundreds of lines'. Hence, generating 'full programs' is a 'significant step forward' and 'more complex'.

Differentiation from existing work (Codex)

Codex[1] is the program synthesis model from OpenAI that powers GitHub Copilot (https://copilot.github.com/). While Codex focuses on general-purpose program completion, AlphaCode seems to focus specifically on programming contests. While Codex is based on a multilayer decoder-only model (GPT-3), AlphaCode is based on an encoder-decoder model architecture. A recent paper [2] from Google evaluating LLMs for code generation also uses a decoder-only setup.

Method

Methods, experiments and insights

Encoder-decoder model

The authors propose encoder-decoder models of various sizes (300M, 1.1B, 2.8B, 8.7B and 41.1B parameters). The architecture is asymmetric: 1536 tokens in the encoder and 768 tokens in the decoder. A SentencePiece tokenizer with a vocabulary of 8,000 tokens is trained on the GitHub and CodeContests data. The same tokenizer is used for the encoder and the decoder, across both programming languages and natural language.

Pretraining on GitHub

The model is pretrained on a snapshot of GitHub code taken on 2021/07/14, including languages like C++, C#, Go, Java, JavaScript, Lua, PHP, Python, Ruby, Rust, Scala, and TypeScript, for a total of 715.1GB of code.

Fine-tuning on the CodeContests dataset

The model is fine-tuned on the CodeContests dataset assembled by the authors. CodeContests (https://github.com/deepmind/code_contests) is a competitive programming dataset for machine learning. It consists of programming problems from a variety of sources: CodeNet, Description2Code, and Codeforces.

Metadata and value conditioning

In addition to the problem statement, the model is conditioned during training on metadata found in programming contest datasets, such as the difficulty rating of the problem, the problem type (e.g., dynamic programming, greedy), and whether a solution was correct, as well as a set of initial test cases. See section 3.2 for more details.
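One way to picture this kind of conditioning is as a short metadata header prepended to the problem statement. The sketch below is only an illustration: the function name `build_conditioned_prompt`, the field names and the layout are assumptions made for this example, not the paper's exact format.

```python
def build_conditioned_prompt(description, rating, tags, is_correct):
    """Prepend metadata lines to a problem statement.

    The field names and layout are illustrative assumptions,
    not the format used in the paper.
    """
    header = [
        f"RATING: {rating}",
        f"TAGS: {', '.join(tags)}",
        # Value conditioning: tell the model whether the target is correct.
        "CORRECT SOLUTION" if is_correct else "INCORRECT SOLUTION",
    ]
    return "\n".join(header) + "\n\n" + description


prompt = build_conditioned_prompt(
    description="Given n integers, print their maximum.",
    rating=800,
    tags=["greedy", "sortings"],
    is_correct=True,
)
print(prompt)
```

At sampling time the true rating and tags are unknown, so a header like this can be filled with random values, while the correctness flag would always be set to ask for a correct solution.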

Large scale sampling (millions) + filtering

They generate several million samples per problem. By varying the metadata fed into the model (e.g., random tags and ratings) and sampling at a high temperature, they can generate a diverse pool of candidate solutions. This initial pool of solutions is then evaluated using tests, which removes about 99% of all generated solutions. Hundreds or thousands of candidates still remain.
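The filtering step can be sketched in a few lines. This is a minimal sketch under simplifying assumptions: candidates are modelled as Python callables that map an input string to an output string, whereas a real system would execute generated source code in a sandbox. The names `passes_examples` and `filter_candidates` are hypothetical.

```python
def passes_examples(program, examples):
    """Return True if the program reproduces every expected output."""
    for given_input, expected_output in examples:
        try:
            if program(given_input).strip() != expected_output.strip():
                return False
        except Exception:
            # A crash counts as a failed test.
            return False
    return True


def filter_candidates(candidates, examples):
    """Keep only the candidates that pass all example tests."""
    return [p for p in candidates if passes_examples(p, examples)]


# Toy problem: read two integers and print their sum.
def add(text):
    a, b = map(int, text.split())
    return str(a + b)


def multiply(text):
    a, b = map(int, text.split())
    return str(a * b)


def crash(text):
    raise ValueError("bad parse")


examples = [("1 2", "3"), ("2 2", "4")]
survivors = filter_candidates([add, multiply, crash], examples)
print([p.__name__ for p in survivors])  # ['add']
```

Note that `multiply` passes the second example on its own (2 * 2 = 4), which is why a single weak test can let incorrect programs through.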

Clustering

Clustering groups semantically similar programs together, so that the final selection avoids picking several programs with the same behavior. Once the clusters are formed, they sample one solution from each cluster, ordered from largest to smallest, in a round-robin manner. To infer code similarity, the authors train a separate model that generates 'plausible' (but not necessarily correct) inputs given a problem description and input/output test cases. At clustering time, they use this model to generate inputs for unseen problems. Programs that produce the same output for each generated input are deemed to have 'similar behaviours' and are assigned to the same cluster.
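A rough sketch of behaviour-based clustering followed by round-robin selection is shown below. It assumes the generated inputs are already available (the input-generation model is not reproduced here), models programs as Python callables, and takes the first member of each cluster instead of sampling one at random. All function names are hypothetical.

```python
from collections import defaultdict


def run(program, value):
    """Run a program on one input, mapping crashes to a sentinel string."""
    try:
        return repr(program(value))
    except Exception:
        return "<error>"


def cluster_by_behaviour(programs, generated_inputs):
    """Group programs whose outputs agree on every generated input."""
    clusters = defaultdict(list)
    for program in programs:
        signature = tuple(run(program, x) for x in generated_inputs)
        clusters[signature].append(program)
    # Largest cluster first.
    return sorted(clusters.values(), key=len, reverse=True)


def pick_submissions(clusters, n):
    """Take one program per cluster, round robin, until n are chosen."""
    picks, depth = [], 0
    while len(picks) < n and any(depth < len(c) for c in clusters):
        for cluster in clusters:
            if depth < len(cluster) and len(picks) < n:
                picks.append(cluster[depth])
        depth += 1
    return picks


def double_a(x):
    return x * 2


def double_b(x):
    return x + x


def square(x):
    return x * x


clusters = cluster_by_behaviour([double_a, double_b, square], generated_inputs=[1, 3])
chosen = pick_submissions(clusters, n=2)
print([p.__name__ for p in chosen])  # ['double_a', 'square']
```

The two doubling functions are written differently but behave identically, so they share a cluster and only one of them uses up a submission slot.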

Evaluation metric - n@k

The authors use an n@k metric: the 'percentage of problems solved using n submissions from k samples per problem'. Note that in this paper, the n submissions are chosen by filtering and clustering the k generated samples. The correctness of a program is checked by executing it on the test cases and comparing the program output with the expected correct output.
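As a small worked example, the metric can be computed from per-problem verdicts. The sketch assumes each problem already has a ranked list of judged submissions (one boolean per submission, True meaning all tests passed); the function name `n_at_k` and the data layout are assumptions for illustration.

```python
def n_at_k(judged_submissions, n):
    """Percentage of problems solved by at least one of the first n submissions.

    judged_submissions holds one list of booleans per problem, in the
    order the submissions would be made.
    """
    if not judged_submissions:
        return 0.0
    solved = sum(any(verdicts[:n]) for verdicts in judged_submissions)
    return 100.0 * solved / len(judged_submissions)


judged = [
    [False, True, False],   # solved on the 2nd submission
    [False, False, False],  # never solved
    [True],                 # solved on the 1st submission
    [False, False, True],   # solved on the 3rd submission
]
print(n_at_k(judged, n=2))  # 50.0
print(n_at_k(judged, n=3))  # 75.0
```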

Results

Summary of results

29.6% 10@100k Solve Rate

Using 10@100k, their best model achieves a solve rate of 29.6% on the test set. See Table 5 and Section 5.1 for more details. 'Overall our system achieved an average ranking of top 54.3% limiting to 10 submissions per problem, with an actual average of 2.4 submissions for each problem solved... Our 10 submissions per problem result corresponds to an estimated Codeforces Elo of 1238, which is within the top 28% of users who have participated in a contest in the last 6 months'.

Key Contributions

A summary of some interesting ideas proposed in this work

CodeContests dataset

The authors contribute a curated dataset called CodeContests, which could be valuable for iterating on program synthesis research!

Automated test generation

In this work the authors propose a useful approach to generating a broad set of tests for problems in their CodeContests dataset. They argue that false positives are a problem in existing datasets for code generation (i.e., some generated solutions may appear correct because the available test cases are not comprehensive). Their approach is to 'mutate' existing input test cases and generate outputs using 30 accepted/correct programs; a mutated input is accepted as a test case if all 30 correct solutions yield the same output.
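The mutate-and-agree idea can be sketched as follows. This is a toy version under stated assumptions: inputs are lists of integers, the mutation simply nudges one value by 1 (the mutations used in the paper are not reproduced here), and two reference solutions stand in for the 30 used by the authors. The names `mutate_input` and `generate_tests` are hypothetical.

```python
import random


def mutate_input(numbers, rng):
    """Hypothetical mutation: nudge one integer up or down by 1."""
    mutated = list(numbers)
    index = rng.randrange(len(mutated))
    mutated[index] += rng.choice([-1, 1])
    return mutated


def generate_tests(seed_inputs, correct_solutions, rng, attempts=20):
    """Accept a mutated input only if all reference solutions agree on it."""
    tests = []
    for _ in range(attempts):
        candidate = mutate_input(rng.choice(seed_inputs), rng)
        try:
            outputs = [solve(list(candidate)) for solve in correct_solutions]
        except Exception:
            continue  # an input that crashes a reference solution is rejected
        if all(out == outputs[0] for out in outputs):
            tests.append((candidate, outputs[0]))
    return tests


# Two accepted solutions to the toy problem "print the maximum".
def max_builtin(numbers):
    return max(numbers)


def max_sorted(numbers):
    return sorted(numbers)[-1]


rng = random.Random(0)
tests = generate_tests([[3, 1, 2]], [max_builtin, max_sorted], rng, attempts=5)
print(len(tests))  # 5
```

If the reference solutions disagree on a mutated input, that input is likely invalid or exposes ambiguity, so it is dropped instead of becoming a test.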

Methods: Large scale sampling, clustering based on code similarity

The authors' approach to inferring code similarity, which is used for clustering, is interesting. Perhaps this setup can also be applied to training models that learn semantic code similarity at scale.

Insights on model analysis

Memorization: The authors compute the longest common substring between generations and solutions in the training set and show that the distribution is similar to a human baseline. Their analysis shows that the model performs better on Python compared to C++ syntax, and fares better on problems like bitmasks, sorting and greedy algorithms, but worse on dynamic programming and constructive algorithms. They also show that the model generates dead code at a rate similar to human baselines.
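A longest-common-substring check of this kind can be approximated with Python's standard library. The sketch below compares strings at the character level, which is an assumption of this example, and the helper names are hypothetical.

```python
from difflib import SequenceMatcher


def longest_common_substring(a, b):
    """Return the longest contiguous run of characters shared by a and b."""
    matcher = SequenceMatcher(None, a, b, autojunk=False)
    match = matcher.find_longest_match(0, len(a), 0, len(b))
    return a[match.a:match.a + match.size]


def max_overlap_with_training(generated, training_solutions):
    """Length of the longest substring shared with any training solution."""
    return max(
        (len(longest_common_substring(generated, s)) for s in training_solutions),
        default=0,
    )


print(longest_common_substring("abcdef", "zzcdezz"))          # cde
print(max_overlap_with_training("abcdef", ["zzcdezz", "ab"]))  # 3
```

Collecting this overlap length for many generated programs, and for human solutions as a baseline, gives the two distributions that such an analysis compares.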

Limitations and Open Questions

A summary of some limitations of the paper and open questions

Generalizability

While programming problems are a very interesting real world scenario, they may not be particularly representative of the broader generic programming scenario (e.g., where programs need to be familiar with APIs, file systems, I/O, etc. to solve problems). To their credit, the authors do acknowledge this in section 8.1. Furthermore, competitive programming problems are typically phrased as lengthy stories, which might add a level of complexity that does not occur frequently in real world problems. Interestingly, section E.3.1 in the paper shows that simplifying the language for a problem can improve model performance.

Also, the large scale sampling + filtering approach (which is critical to the authors' approach) may result in latency costs that preclude its use in certain use cases.

Test generation and availability of correct solutions

The authors' approach to generating additional tests relies on the availability of multiple (30) correct solutions for each problem in the dataset. This requirement can be difficult to replicate/satisfy outside of competitive programming.

Performance comparison for code snippets vs full programs

A large part of the motivation for this work hinges on the 'complexity' of generating full programs for competitive programming problems. It would be great to explore additional ablation studies that tease apart the ways in which (sets of) snippets/functions differ from full programs. Also, the distribution of lengths of the program solutions generated by AlphaCode is unclear (e.g., 10s of lines vs 100s of lines).

Comparison with a decoder-only model (Codex)

It is still slightly unclear whether an encoder-decoder setup is definitively superior to a decoder-only setup. Table 10 provides some comparisons of a 12B Codex model with a 1B AlphaCode model evaluated on the APPS dataset. When both models try 5 filtered candidates from 1000 generated candidates, Codex achieves a score of 24.5% on simple problems and 3% on the harder competition problems, while AlphaCode achieves 14% on the simple problems and 4.58% on the harder competition problems. Note that AlphaCode is explicitly fine-tuned on competition style problems.

Application Opportunities

Commentary on potential use cases for this work

Code generation research and practice

The insights on the behavior of LLMs for program synthesis, the contributed dataset and discussions provided in this paper have clear applications in building tools that support software engineering tasks (e.g., NL2Code, Code-to-Documentation, program generation, code search etc).

Summary

Overall, this paper definitely represents progress in the domain of deep learning for code generation (program synthesis). It is also very well written, easy to read and provides extensive discussions on tricks to make sampling efficient (GOLD training, tempering), limitations of the model, applications and societal impact. IMHO, a couple of interesting areas for improvement are related to training an equally capable model with less information (e.g., without metadata and value conditioning), and perhaps more efficient candidate selection.

References

List of references and related works/projects.

[1]: Chen, M., Tworek, J., Jun, H., Yuan, Q., Pinto, H.P.D.O., Kaplan, J., Edwards, H., Burda, Y., Joseph, N., Brockman, G. and Ray, A., 2021. Evaluating large language models trained on code. arXiv preprint arXiv:2107.03374.

[2]: Austin, J., Odena, A., Nye, M., Bosma, M., Michalewski, H., Dohan, D., ... & Sutton, C. (2021). Program synthesis with large language models. arXiv preprint arXiv:2108.07732.

[3]: Xu, F.F., Alon, U., Neubig, G. and Hellendoorn, V.J., 2022. A Systematic Evaluation of Large Language Models of Code. arXiv preprint arXiv:2202.13169.

[4]: Pradel, M. and Chandra, S., 2021. Neural software analysis. Communications of the ACM, 65(1), pp.86-96.

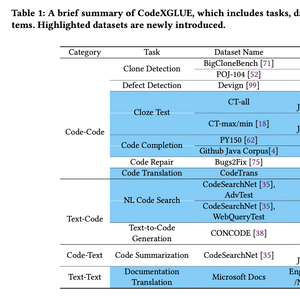

[5]: Lu, S., Guo, D., Ren, S., Huang, J., Svyatkovskiy, A., Blanco, A., Clement, C., Drain, D., Jiang, D., Tang, D. and Li, G., 2021. CodeXGLUE: A machine learning benchmark dataset for code understanding and generation. arXiv preprint arXiv:2102.04664.

[6]: Feng, Z., Guo, D., Tang, D., Duan, N., Feng, X., Gong, M., Shou, L., Qin, B., Liu, T., Jiang, D. and Zhou, M., 2020. Codebert: A pre-trained model for programming and natural languages. arXiv preprint arXiv:2002.08155.

Discuss This Paper

Did you read the AlphaCode paper and have some thoughts on its contributions/limitations? Let's discuss it on Twitter.

I finally got across to reading the AlphaCode paper from @DeepMind in a bit more detail and wrote some of my notes on their method, key contributions, limitations/ open questions. 🧵 https://t.co/3hRMWqaiwM pic.twitter.com/t18Pa366qb

— Victor Dibia (@vykthur) February 19, 2022

Interested in more articles like this? Subscribe to get a monthly roundup of new posts and other interesting ideas at the intersection of Applied AI and HCI.

Read and Subscribe

Powered by Substack. Privacy Policy.

State of Deep Learning for Code Generation (DL4Code)

State of Deep Learning for Code Generation (DL4Code) CodeXGLUE: A Machine Learning Benchmark Dataset for Code Understanding and Generation | Paper Review

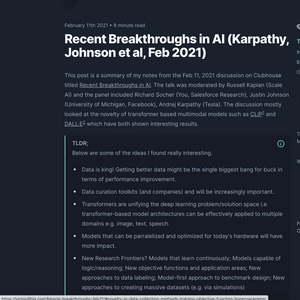

CodeXGLUE: A Machine Learning Benchmark Dataset for Code Understanding and Generation | Paper Review Recent Breakthroughs in AI (Karpathy, Johnson et al, Feb 2021)

Recent Breakthroughs in AI (Karpathy, Johnson et al, Feb 2021) 2023 Year in Review

2023 Year in Review Top 10 Machine Learning and Design Insights from Google IO 2021

Top 10 Machine Learning and Design Insights from Google IO 2021 The Agent Execution Loop: Building an Agent From Scratch

The Agent Execution Loop: Building an Agent From Scratch Neural Extractive Summarization with BERT

Neural Extractive Summarization with BERT ART + AI — Generating African Masks using (Tensorflow and TPUs)

ART + AI — Generating African Masks using (Tensorflow and TPUs)