What's New in VR 2022? Inside-Out Tracking, Passthrough Rendering, Realtime Handtracking

The last time I actively used VR systems was in 2017, working with the HTC Vive controller for some experiments with 6 degrees of freedom (6DoF) tracking as part of a room-scale interactive application (think pointing at/interacting with screens and physical objects in a room). Back then, the HTC Vive used its Lighthouse tracking system, which required base stations installed in a room. The base stations emit rapid sweeps of non-visible (infrared) light in multiple directions; the tethered head-mounted display and controllers have photoreceptors that detect the light, and the system uses triangulation to infer their position and orientation in 3D space. That system was clunky and not portable across spaces. This setup, where external equipment (here, the base stations) is used, is typically referred to as outside-in tracking.
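To make the timing-plus-triangulation idea a bit more concrete, here is a toy 2D sketch. It assumes a sweep that rotates at a constant rate after a sync pulse and two base stations at known positions; the 60 Hz rate, the function names and the flat 2D geometry are all my simplifications for illustration, not how the actual Lighthouse firmware is implemented.

```python
import math

ROTOR_HZ = 60.0  # assumed sweep rate, for illustration only


def sweep_angle(seconds_since_sync, rotor_hz=ROTOR_HZ):
    """Angle (radians) the sweep has turned when it hits a photoreceptor."""
    return 2.0 * math.pi * rotor_hz * seconds_since_sync


def triangulate_2d(station_a, angle_a, station_b, angle_b):
    """Intersect two bearing rays (angles measured from the +x axis)."""
    ax, ay = station_a
    bx, by = station_b
    dax, day = math.cos(angle_a), math.sin(angle_a)
    dbx, dby = math.cos(angle_b), math.sin(angle_b)
    denom = dax * dby - day * dbx
    if abs(denom) < 1e-9:
        raise ValueError("rays are parallel; no unique intersection")
    # Solve station_a + t * dir_a == station_b + s * dir_b for t.
    t = ((bx - ax) * dby - (by - ay) * dbx) / denom
    return ax + t * dax, ay + t * day


if __name__ == "__main__":
    # Hypothetical hit times (seconds after each station's sync pulse).
    angle_a = sweep_angle(1.0 / 480.0)
    angle_b = sweep_angle(3.0 / 480.0)
    print(triangulate_2d((0.0, 0.0), angle_a, (4.0, 0.0), angle_b))
```

The point is only that a precise hit time becomes an angle, and two angles from known positions pin down a location. A real system works in 3D and combines many photoreceptors with known positions on the device to recover orientation as well.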

I recently tried the Oculus Quest 2 and ... well, things have changed! While this post focuses on the Oculus Quest 2 (which I have found to be the most developer-friendly in terms of ease of setup, price point and features), there are other VR headsets with equally great performance (HTC Vive family, Valve Index, HP Reverb G2, Varjo XR-3 family, etc.).

Standalone, Inside-Out Tracking

Tracking with no wires.

Source: Oculus

New devices like the Oculus Quest 2 provide a fully untethered experience: they track head motion, controllers and hands with no cables and no base stations. And it works! A big part of this change is the move from outside-in tracking (i.e., using external sensors for location/orientation) to inside-out tracking (i.e., using sensors on the device itself to establish location/orientation). A combination of improving sensors (cameras, IMUs), better SLAM (simultaneous localization and mapping) software and improved processing power enables this shift. The Quest essentially digitizes its environment in realtime and uses this model as a set of anchors for tracking its position in space. This is also known as markerless inside-out tracking.
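As a rough illustration of "using the environment model as anchors", here is a small 2D sketch of the localization half of the problem. It assumes the device already has a map of anchor points in world coordinates and can observe those same anchors in its own coordinate frame; it then recovers its position and heading with a least-squares rigid alignment. The function name and the 2D setup are hypothetical simplifications of mine. Real SLAM also builds the map on the fly, works in 3D and fuses IMU data.

```python
import math


def estimate_pose_2d(anchors_world, anchors_seen):
    """Estimate device pose (x, y, heading) from matched anchor points.

    anchors_world: anchor positions in the world map.
    anchors_seen:  the same anchors as observed in the device's own frame.
    Finds the rotation and translation that best map seen -> world.
    """
    n = len(anchors_world)
    if n < 2 or n != len(anchors_seen):
        raise ValueError("need at least two matched anchors")

    # Centroids of both point sets.
    cwx = sum(p[0] for p in anchors_world) / n
    cwy = sum(p[1] for p in anchors_world) / n
    csx = sum(p[0] for p in anchors_seen) / n
    csy = sum(p[1] for p in anchors_seen) / n

    # Accumulate dot and cross terms of the centered points.
    s_dot = 0.0
    s_cross = 0.0
    for (wx, wy), (sx, sy) in zip(anchors_world, anchors_seen):
        wx, wy = wx - cwx, wy - cwy
        sx, sy = sx - csx, sy - csy
        s_dot += sx * wx + sy * wy
        s_cross += sx * wy - sy * wx

    heading = math.atan2(s_cross, s_dot)
    c, s = math.cos(heading), math.sin(heading)
    # Device position = world centroid minus the rotated device-frame centroid.
    x = cwx - (c * csx - s * csy)
    y = cwy - (s * csx + c * csy)
    return x, y, heading
```

Run on every frame, something like this gives a stream of poses without any external hardware, which is the essence of the inside-out approach.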

Passthrough Rendering

Source: Oculus

One drawback of VR headsets was that the user was completely immersed in the VR space and could not see their actual physical space. This meant that to move safely in the physical world, you had to rely on calibrated boundary markers shown in VR, or take off the headset. With Passthrough, you can see your physical space from within the VR space - enabling Mixed Reality applications (sort of).

In practice, passthrough rendering is hard, and the cameras currently on the Quest 2 are designed for tracking (monochrome, medium resolution) as opposed to capturing and rendering the real world. True Mixed Reality headsets (e.g., Microsoft HoloLens, the Varjo XR-3 family, and Acer, Dell, Samsung and HP headsets built for the Windows Mixed Reality platform on Windows 10) achieve this real world + VR blend better.

Realtime 3D Handtracking

Source: Oculus

Advances in machine learning models enable 3D handtracking, effectively allowing users to use their hands as an input device for interacting with VR objects. Facebook originally announced handtracking for the Oculus line of devices in Sept 2019 as a developer preview. It is still not enabled by default (performance constraints). See this post on how handtracking is achieved using only monochrome camera images and machine learning - https://ai.facebook.com/blog/hand-tracking-deep-neural-networks .
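Once a model gives you 3D hand keypoints, turning them into an input signal can be quite simple. Below is a minimal sketch of pinch detection, assuming the tracker reports thumb-tip and index-tip positions in meters. The function name, the 2 cm threshold and the keypoint format are hypothetical choices of mine, not the Oculus SDK's API.

```python
import math

PINCH_THRESHOLD_M = 0.02  # hypothetical threshold: fingertips within 2 cm


def is_pinching(thumb_tip, index_tip, threshold_m=PINCH_THRESHOLD_M):
    """Return True when the thumb and index fingertips are close together.

    thumb_tip, index_tip: (x, y, z) positions in meters from a hand tracker.
    """
    return math.dist(thumb_tip, index_tip) < threshold_m


if __name__ == "__main__":
    # Made-up keypoints: fingertips roughly 1 cm apart.
    print(is_pinching((0.10, 0.20, 0.30), (0.10, 0.21, 0.30)))
```

A real application would likely smooth this over a few frames so that a noisy keypoint does not trigger an accidental "click".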

What's Next? Eye Tracking, Face Tracking, Color Passthrough

There are still a bunch of new and interesting innovation opportunities on a headset. You might have noticed that the Quest 2 passthrough rendering is currently monochrome - well, full color passthrough is on the horizon. Also, eye tracking + face tracking (to maintain fidelity between the user's expressions and their avatar's) is an area that is currently being explored.

Facebook recently (October 2021) announced a high-end VR headset codenamed Project Cambria with a few of these features. And of course there is also the rumored/expected Apple VR headset.

Overall, it's an interesting and growing space!

Hello World VR Application with the Oculus Quest 2 and Unity

Hello World VR Application with the Oculus Quest 2 and Unity Top 10 Machine Learning and Design Insights from Google IO 2021

Top 10 Machine Learning and Design Insights from Google IO 2021 10 Predictions on the Future of Cloud Computing by 2025 - Insights from Google Next Conference

10 Predictions on the Future of Cloud Computing by 2025 - Insights from Google Next Conference Recent Breakthroughs in AI (Karpathy, Johnson et al, Feb 2021)

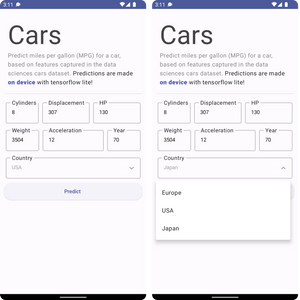

Recent Breakthroughs in AI (Karpathy, Johnson et al, Feb 2021) How to Build An Android App and Integrate Tensorflow ML Models

How to Build An Android App and Integrate Tensorflow ML Models 2019 Year in Review

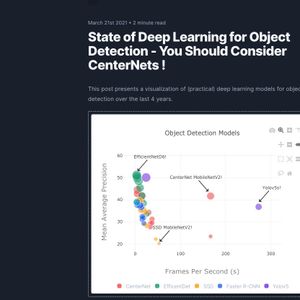

2019 Year in Review State of Deep Learning for Object Detection - You Should Consider CenterNets!

State of Deep Learning for Object Detection - You Should Consider CenterNets! 2025 Year in Review

2025 Year in Review